[Avg. reading time: 0 minutes]

[Avg. reading time: 0 minutes]

Disclaimer

[Avg. reading time: 4 minutes]

Required Tools

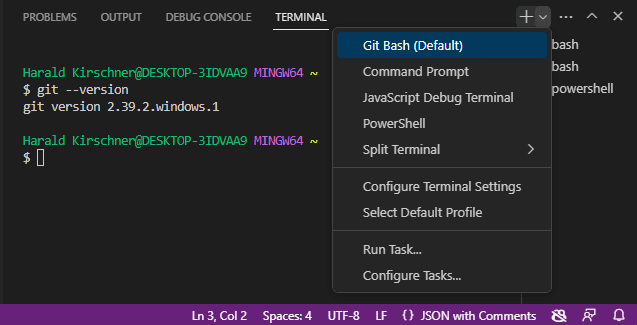

Windows

Mac

Common Tools (Windows & Mac)

-

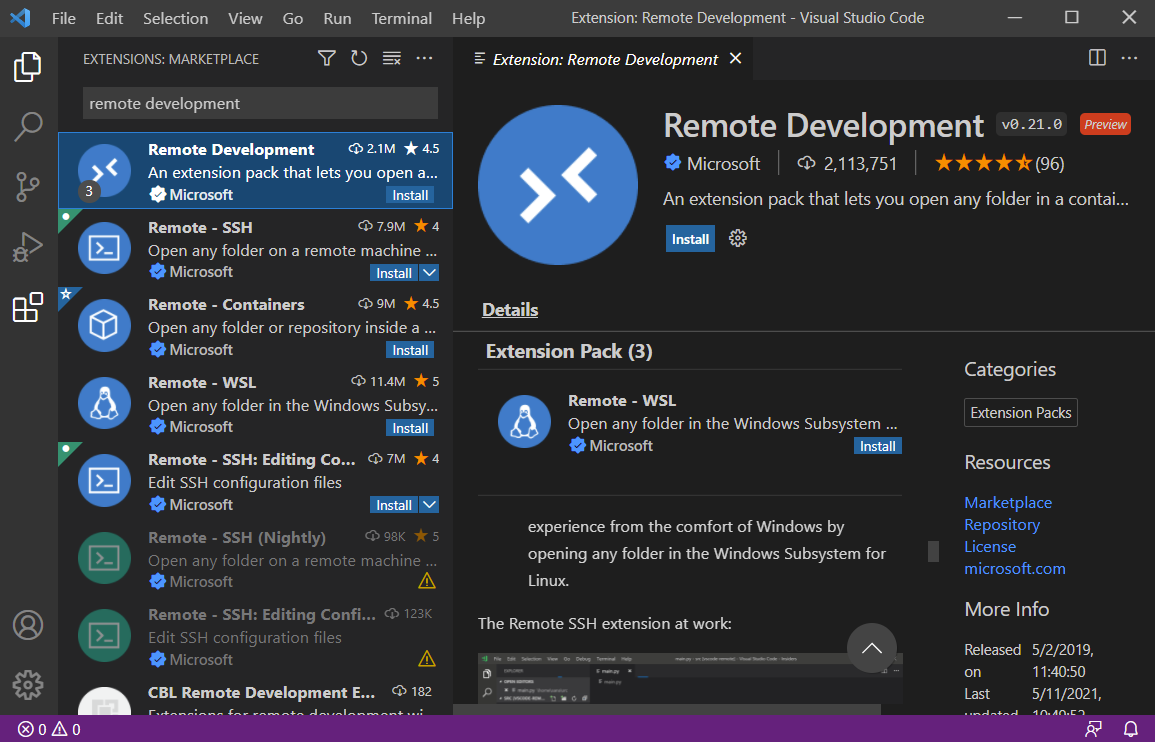

Install this VS Code Extension**

Remote Development

Configure Env using Dev Container

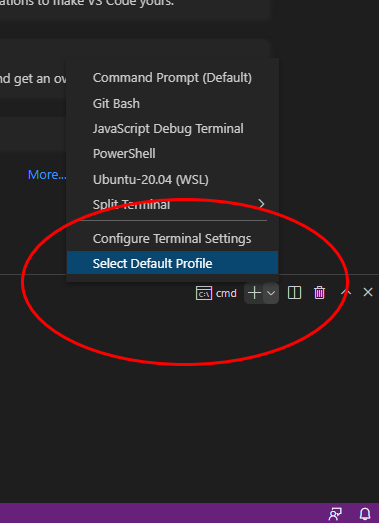

Goto Terminal / Command Prompt

git clone https://github.com/gchandra10/workspace-iot-upperstack.git

- Make sure Docker is running

- Open VSCode

- Goto File > Open Workspace from File

- Goto workspace-rust-de folder and choose the workspace.

- When VS Code prompts to “Reopen in Container” click it.

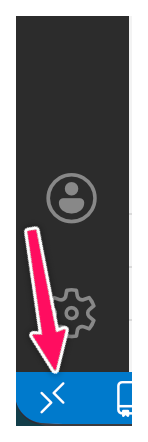

If VSCode doesnt prompt, then click the “Remote Connection” button at the Left Bottom of the screen.

Cloud Tools

[Avg. reading time: 1 minute]

Overview of IOT

- Introduction

- IoT Use Cases

- JOBS

- Computing Types

- Evolution of IOT

- Protocols

- IOT Stack Overview

- Lower Stack

- Upper Stack

- Puzzle

[Avg. reading time: 6 minutes]

Introduction

What is IoT

The Internet of Things is a system where physical objects are equipped with sensors, software, and network connectivity so they can collect data, communicate over the network, and trigger actions without continuous human involvement.

IoT is not just the device.

IoT is devices + data + connectivity + action.

Why IoT Matters

Operational Efficiency

- Automates repetitive and time sensitive tasks

- Reduces manual monitoring and human error

- Enables real time visibility into systems

Data Driven Decisions

- Sensors generate continuous time series data

- Decisions shift from intuition to measurable signals

- Analytics and ML sit on top of IoT, not the other way around

Quality of Life

- Healthcare monitoring, smart homes, traffic systems

- Problems are detected earlier, not after failure

- Convenience is a side effect, reliability is the real win

Economic Impact

- New products, new services, new pricing models

- Hardware vendors become data companies

- Entire industries move from reactive to predictive

What is not IOT

Devices that work only locally

- A USB temperature sensor dumping values to a laptop

- An electronic thermostat controlling temperature locally

- No network, no IoT

Systems with no outward data flow

- Hardware that performs an action but emits no telemetry

- If data never leaves the device, it is automation, not IoT

What MUST exist for something to be IoT

- Continuous or event based data generation

- Network communication

- Backend ingestion

- Storage, usually time series oriented

- Processing or decision making

- Optional but important feedback or control loop

Examples

Watch vs Smart Watch

CO Detector vs Smart CO Detector

- Senses CO locally

- Triggers a buzzer or alarm

- Operates entirely offline

vs

- Transmits CO readings or alarm events

- Uses a network to communicate

- Notifies an external system such as a phone app, home hub, or fire department

Read more

Local intelligence is embedded systems. Networked intelligence is IoT.

#IOT #Importance #smart #network

[Avg. reading time: 3 minutes]

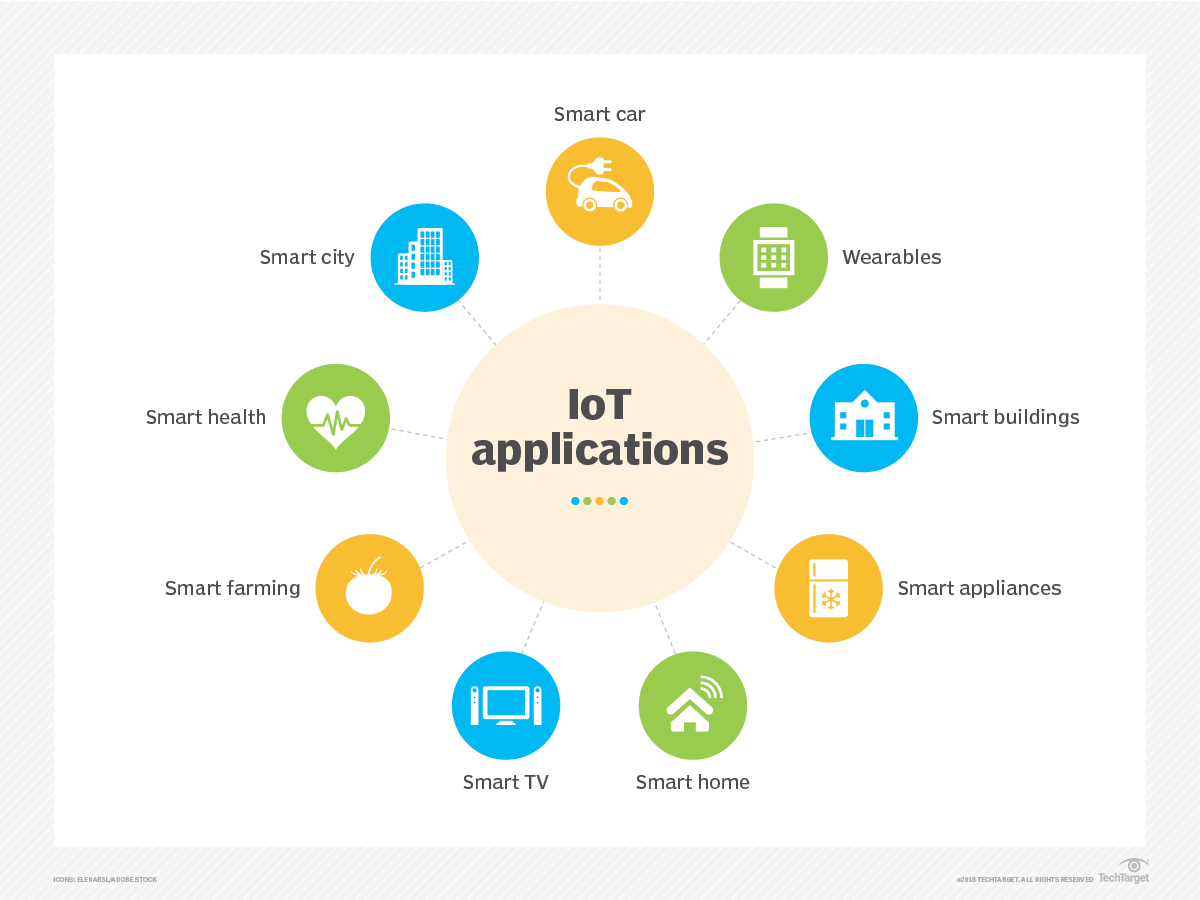

Use Cases

Every IoT use case follows the same pattern

sense → transmit → store → decide → act

1. Smart Homes

Use Case Home automation for comfort, security, and energy efficiency.

Example Smart thermostats like Nest adjust temperature based on occupancy and behavior. Smart locks and cameras like Ring stream events and alerts.

Temperature or motion sensed > data sent > rule applied > device reacts.

2. Healthcare

Use Case Remote patient monitoring and early intervention.

Example Wearables such as Fitbit and Apple Watch track vitals and activity and trigger alerts.

Vitals sensed > transmitted > analyzed > alert raised.

3. Industrial IoT (IIoT)

Use Case Predictive maintenance and factory automation.

Example Sensors monitor vibration, temperature, and pressure to predict failures before they occur using platforms like GE Predix.

Machine signals sensed > streamed > modeled > maintenance action triggered.

Similarly Smart Shelves inventory update, Amazon Go, Tesla Cars, Smart meters, Air Quality and so on.

Why IoT Works Across All Fields

- Sensors are cheap

- Networks already exist

- Storage is inexpensive

- Compute and analytics are mature

#iotusecases #logistics #environmental

[Avg. reading time: 3 minutes]

JOBS

| Role | What They Actually Do | Core Skills |

|---|---|---|

| IoT Application Developer | Build web or mobile apps that display IoT data and trigger actions | APIs, REST, MQTT, Web or Mobile frameworks |

| IoT Solutions Architect | Design the full IoT system from devices to cloud and apps | Architecture, cloud IoT services, security |

| Cloud Integration Engineer | Connect devices to cloud storage, pipelines, and services | AWS or Azure, MQTT, REST, data pipelines |

| IoT Data Analyst | Analyze sensor and event data to extract insights | Python, SQL, time series data, dashboards |

| IoT Product Manager | Decide what gets built and why from a business angle | Product thinking, requirements, communication |

| IoT Security Specialist | Secure data, APIs, devices, and cloud integrations | Encryption, auth, IAM, threat modeling |

| IoT Test Engineer | Validate reliability, scale, and failure scenarios | Testing, automation, system validation |

| IoT Support or Operations | Keep systems running and debug failures | Monitoring, logs, troubleshooting |

#jobs #iotdevelopers #iotarchitects #dataecosystem

[Avg. reading time: 15 minutes]

Computing Types

Modern software systems use different computing approaches depending on where computation happens, how systems are structured, and when decisions are made.

There is no single “best” computing model. Each type exists to solve a specific class of problems related to scale, latency, reliability, cost, and complexity.

As systems evolved from single machines to globally distributed platforms and IoT systems, computing models also evolved:

- From centralized to distributed

- From monolithic to microservices

- From cloud-only to edge and fog

- From reactive to proactive

Understanding these computing types helps you:

- Choose the right architecture for a problem

- Understand why IoT systems cannot rely on cloud alone

- See how modern data and IoT platforms fit together

Centralized Computing

Centralized Computing

Single computer or location handles all processing and storage. All resources and decisions are managed from one central point.

Characteristics

- Single point of control

- Centralized decision making

- Consistent data

- Simpler security

- Easier maintenance

Examples

- Traditional banking systems

- Library systems

- School management systems

Typical setup

- Central server or mainframe

- All branches connect to HQ

- Single database

- Centralized processing

- One place for updates

Major drawback

- Single point of failure

Distributed Computing

Multiple computers work together as one logical system. Processing, storage, and management are spread across multiple machines or locations.

Characteristics

- Shared resources

- Fault tolerance

- High availability

- Horizontal scalability

- Load balancing

Example

- Google Search

- Multiple data centers

- Distributed query processing

- Replication and redundancy

Monolithic

Single application where all functionality is packaged into one codebase.

Characteristics

- One deployment unit

- Shared database

- Tightly coupled components

- Single technology stack

- All-or-nothing scaling

Advantages

- Simple to build

- Easy to deploy

- Good performance

- Lower initial cost

Disadvantages

- Hard to scale selectively

- Technology lock-in

Examples

- WordPress

- Early-stage applications (many start monolithic)

Microservices

Application built as independent, small services that communicate via APIs.

Characteristics

- Independent services

- Separate databases (often)

- Loosely coupled

- Different tech stacks possible

- Individual scaling

Advantages

- Scale only what is needed

- Team autonomy

- Technology flexibility

Disadvantages

- Operational overhead

- Higher complexity

- Latency and distributed failures

- Tooling sprawl if unmanaged

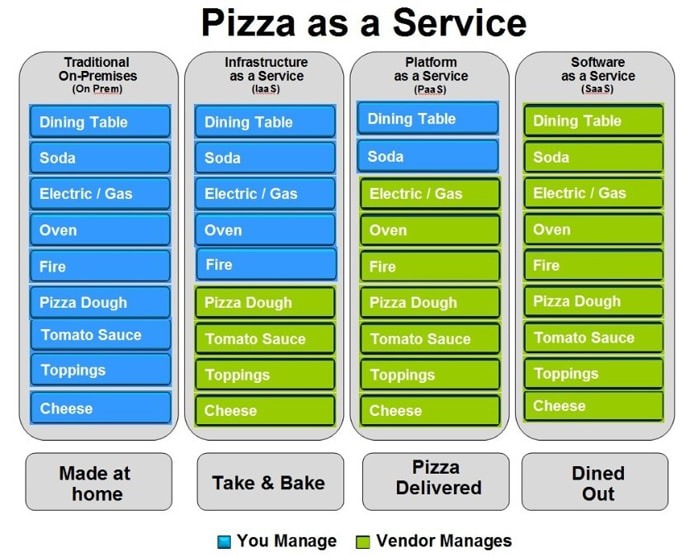

Cloud Computing

Cloud computing provides compute resources (servers, storage, databases, networking, software) over the internet with pay-as-you-go pricing.

Benefits

- Cost savings

- No upfront infrastructure

- Pay for usage

- Reduced maintenance

- Scalability

- Scale up or down on demand

- Handle traffic spikes

- Accessibility

- Access from anywhere

- Global reach

- Reliability

- Backups and disaster recovery

- Multi-region options

- Automatic updates

- Security patches

- Managed services reduce ops work

Examples

- Cloud storage

- OTT streaming platforms

Service Models

- SaaS (Software as a Service)

- Ready-to-use apps

- Examples: Gmail, Dropbox, Slack

- PaaS (Platform as a Service)

- App runtime and developer platforms

- Examples: Heroku, Google App Engine

- IaaS (Infrastructure as a Service)

- Compute, network, storage building blocks

- Examples: AWS EC2, Azure VMs

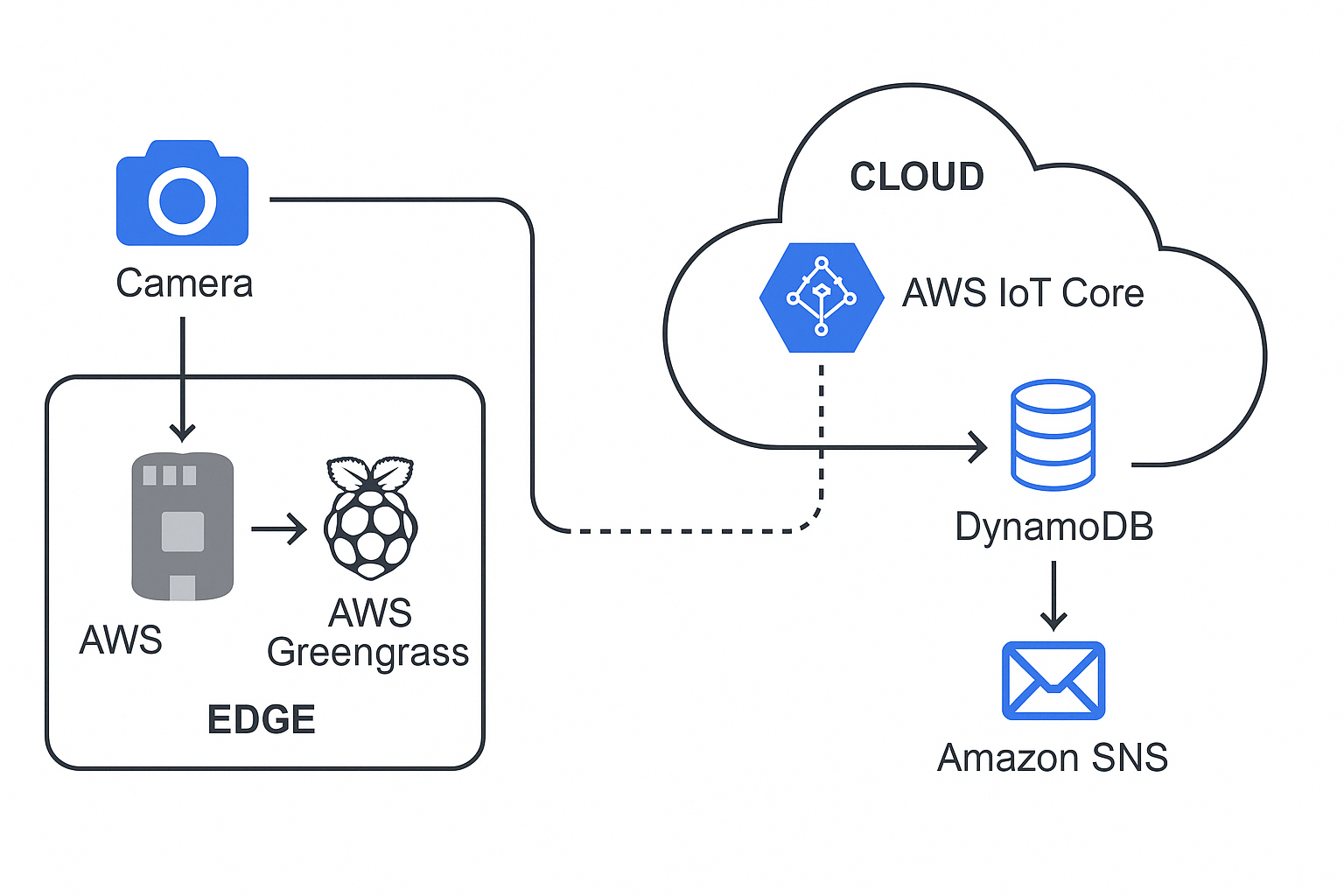

Edge Computing

Edge computing moves computation and storage closer to where data is generated, near or on IoT devices.

Benefits

- Lower latency

- Works with limited internet

- Reduces bandwidth cost

- Better privacy (data stays local)

Simple examples

- Smart camera doing motion detection locally

- Smart thermostat adjusting temperature locally

- Factory robot making real-time decisions from sensors

Examples

- Smart Home Security

- Local video processing

- Only sends alerts or clips to cloud

- Tesla cars

- Local sensor fusion and obstacle detection

- Split-second decisions on device

Fog Computing

What it does

- Aggregates data from multiple edge devices

- Provides more compute than individual devices

- Filters and enriches data before sending to cloud

- Keeps latency lower than cloud-only systems

Examples

- Smart building local server processing many sensors

- Factory gateway analyzing multiple machines

- Farm gateway coordinating multiple sensors and controllers

Cloud vs Edge vs Fog

| Aspect | Cloud | Edge | Fog |

|---|---|---|---|

| Location | Central data centers | On/near device | Local network |

| Latency | High | Very low | Medium |

| Compute | Very high | Low | Medium |

| Storage | High | Very limited | Limited |

| Internet dependency | Required | Optional | Local network required |

| Data scope | Global | Single device | Multiple local devices |

| Typical use | Analytics, long-term storage | Real-time decisions | Aggregation, coordination |

| Example | AWS | Smart camera | Factory gateway |

Computing Evolution

Manual Computing

Calculations and decisions performed by humans.

Drawbacks

- Slow

- Error-prone

- Not scalable

Automated Computing

Computers execute workflows with minimal human involvement.

- Faster processing

- Higher accuracy

- Efficient resource use

Reactive Computing

System responds after events happen.

Examples

- Incident response

- Support tickets

- After-the-fact troubleshooting

Proactive Computing

System predicts and acts before failures happen.

Examples

- Predictive maintenance

- Capacity planning

- Anomaly detection

Idea

- Prevention is better than cure

Remember the saying “Prevention is better than cure”

[Avg. reading time: 10 minutes]

Evolution of IoT

IoT evolved from isolated device communication to distributed, event-driven systems where intelligence is shared across edge, fog, and cloud.

Early Phase (2000–2010): Machine-to-Machine Era

Characteristics

- Direct device-to-system communication

- Mostly industrial use cases

- Proprietary protocols

- Vendor-locked implementations

Limitations

- No standardization

- Poor interoperability

- High cost

- Difficult to scale

Example: OnStar Vehicle Communication

- Direct vehicle to control-center connection

- Proprietary cellular network

- Centralized command system

Capabilities

- Emergency alerts

- Vehicle tracking

- Remote diagnostics

Limitations

- Closed ecosystem

- Single-vendor dependency

- High operational cost

Implementation: General Motors’ OnStar system (2000s)

Initial IoT Phase (2010–2015): Three-Layer Architecture

Architecture Layers

Perception Layer

- Sensors and actuators

- Data collection from physical world

Network Layer

- Connectivity

- Data transmission

Application Layer

- Basic analytics

- Visualization

- User interfaces

Key Advances

- Cloud computing adoption

- Open protocols emerge

- Improved interoperability

Example 1: Nest Learning Thermostat (1st Generation)

- Temperature and motion sensors

- Wi-Fi connectivity

- Cloud-backed mobile application

Impact

- Mainstream smart home adoption

- Remote monitoring and automation

Intermediate Phase (2015-2018): Five-Layer Architecture

The five-layer model emerged because cloud-only processing could not meet latency, scale, and enterprise integration needs.

Additional Layers

- Transport Layer: reliable data movement

- Processing Layer: analytics and rule engines

- Business Layer: enterprise integration and monetization

Improvements

- Better security models

- Edge computing introduced

- Improved scalability

- Structured data management

Example: Smart City - Barcelona

Architecture

- City-wide sensor networks

- High-speed transport networks

- Central data platforms

- Multiple city applications

- Business and governance layer

Results

- Reduced water consumption

- Improved traffic flow

- Optimized waste management

Modern Phase (2018-Present): Service-Oriented Architecture

Core Characteristics

- Microservices-based systems

- Edge–Cloud continuum

- Event-driven architecture

- Zero-trust security

- AI and ML integration

Key Capabilities

Distributed Intelligence

- Edge processing

- Fog computing

- Autonomous decision-making

Advanced Integration

- API-first design

- Event mesh

- Digital twins

Security

- Identity-based access

- End-to-end encryption

- Continuous threat detection

Scalability

- Containers

- Serverless computing

- Auto-scaling

Example: Tesla Vehicle Platform

Architecture

- Edge computing inside vehicles

- Cloud-based OTA updates

- AI-driven autopilot

- Digital vehicle twins

Impact

- Continuous improvement

- Predictive maintenance

- Fleet-level intelligence

Example : Amazon Go Stores

Technologies

- Computer vision

- Sensor fusion

- Edge AI

- Deep learning

Results

- Cashierless retail

- Reduced operational cost

- Improved customer experience

Emerging Trends in IoT

Autonomous IoT

- Self-healing systems

- Self-optimizing networks

- Cognitive decision-making

Sustainable IoT

- Energy-efficient design

- Green computing

- Resource optimization

Resilient IoT

- Fault tolerance

- Disaster recovery

- Business continuity

Example: Smart Agriculture

- Autonomous machinery

- Drone integration

- Soil and weather sensors

- Precision farming

Example: Smart Grids

- Grid sensors

- Smart meters

- Edge intelligence

- Automated fault recovery

- Demand response

Key Architectural Shifts Over Time:

- From Centralized → Distributed

- From Monolithic → Microservices

- From Cloud-centric → Edge-centric

- From Static → Dynamic

- From Manual → Automated

- From Reactive → Proactive

Impact on Design Considerations

Scalability

- Vertical → Horizontal

- Static → Elastic

Security

- Perimeter-based → Zero trust

- Reactive → Preventive

Integration

- Point-to-point → Event-driven

- Tight coupling → Loose coupling

Operations

- Manual → Automated

- Centralized → Distributed

[Avg. reading time: 14 minutes]

Protocols

A protocol in the context of computing and communications refers to a set of rules and conventions that dictate how data is transmitted and received over a network. Protocols ensure that different devices and systems can communicate with each other reliably and effectively. They define the format, timing, sequencing, and error checking mechanisms used in data exchange.

Importance of Protocols

Interoperability: Allows different systems and devices from various manufacturers to work together.

Reliability: Ensures data is transmitted accurately and efficiently.

Standardization: Provides a common framework that developers can follow, leading to consistent implementations.

Commonly used Protocols

HTTP (HyperText Transfer Protocol): Used for transmitting web pages over the internet.

FTP (File Transfer Protocol): Used for transferring files between computers.

TCP/IP (Transmission Control Protocol/Internet Protocol): A suite of communication protocols used to interconnect network devices on the internet.

UDP (User Datagram Protocol): UDP, or User Datagram Protocol, is a communication protocol used across the Internet. It is part of the Internet Protocol Suite, which is used by networked devices to send short messages known as datagrams but with minimal protocol mechanisms. Used in VoIP & Live Streaming.

Key Characteristics of Protocols

Syntax:

Defines the structure or format of the data.

Example: How data packets are formatted or how headers are structured.

Semantics:

Describes the meaning of each section of bits in the data.

Example: What specific bits represent, such as addressing information or control flags.

Timing:

Controls the sequencing and speed of data exchange.

Example: When data should be sent, how fast it should be sent, and how to handle synchronization.

Popular IoT Protocols

1. Bluetooth

Description: A short-range wireless technology standard used for exchanging data between fixed and mobile devices. Its a Key protocol in the IoT ecosystem.

Use Cases:

- Wearable devices (e.g., fitness trackers, smartwatches)

- Wireless peripherals (e.g., keyboards, mice, headphones)

- Home automation (e.g., smart locks, lighting control)

- Health monitoring devices

2. Zigbee

Description: A low-power, low data rate wireless mesh network standard ideal for IoT applications. It can handle larger networks in 1000’s of nodes compared to Bluetooch with a limit of 5 to 30 devices. Lower Latency compared to Bluetooth. Needs a hub / controller to communicate. (Google Nest, Apple HomePod)

Use Cases:

- Smart home devices (e.g., smart bulbs, thermostats, security systems)

- Industrial automation

- Smart energy applications (e.g., smart meters)

- Wireless sensor networks

3. NFC (Near Field Communication)

Description: Direct Peer to Peer communication system. A set of communication protocols for communication between two electronic devices over a distance of 4 cm (1.6 in) or less. No pairing or controller is needed.

Use Cases:

- Contactless payments (e.g., Apple Pay, Google Wallet)

- Access control (e.g., NFC-enabled door locks, Yubi Keys)

- Data exchange (e.g., transferring contacts, photos)

- Smart posters and advertising

Payment Terminal

Phone → Terminal (direct) Terminal → Payment processor (separate connection)

Door Access

Card → Reader (direct) Reader → Access control system (separate connection)

4. LoRaWAN (Long Range Wide Area Network)

Description: A low-power, long-range wireless protocol designed for IoT applications.

Use Cases:

- Smart cities (e.g., parking sensors, street lighting)

- Agriculture (e.g., soil moisture sensors)

- Asset tracking

- Environmental monitoring

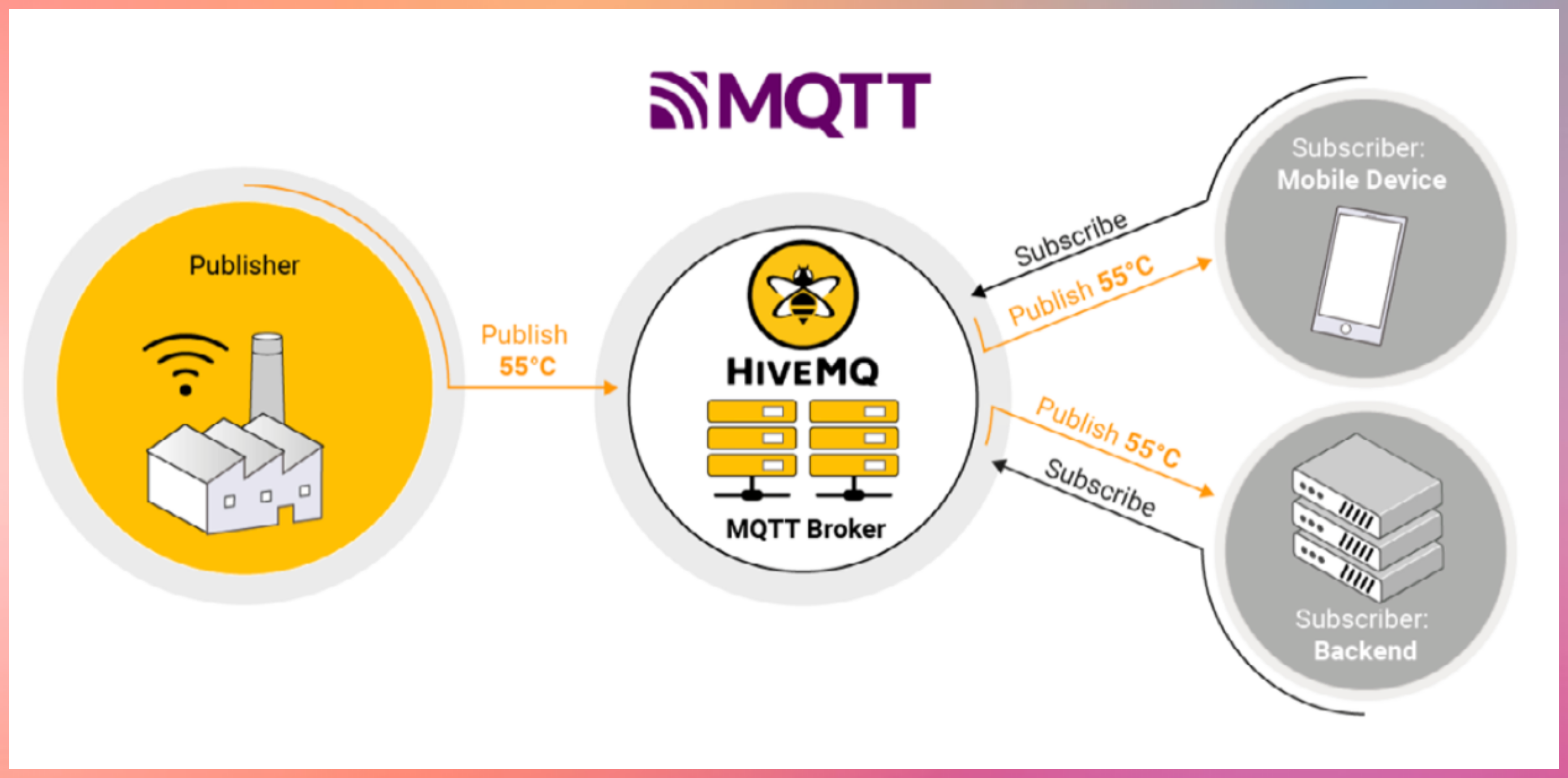

5. MQTT (Message Queuing Telemetry Transport)

Description: A lightweight messaging protocol for small sensors and mobile devices optimized for high-latency or unreliable networks.

- It’s a lightweight messaging protocol designed for devices with limited resources

- Works like a postal service for IoT devices

- Uses a publish/subscribe model instead of direct device-to-device communication

- Perfect for IoT because it’s:

- Low bandwidth

- Battery efficient

- Reliable even with poor connections

Use Cases:

- Home automation (e.g., smart home controllers)

- Industrial automation.

- Telemetry data collection.

- Remote monitoring.

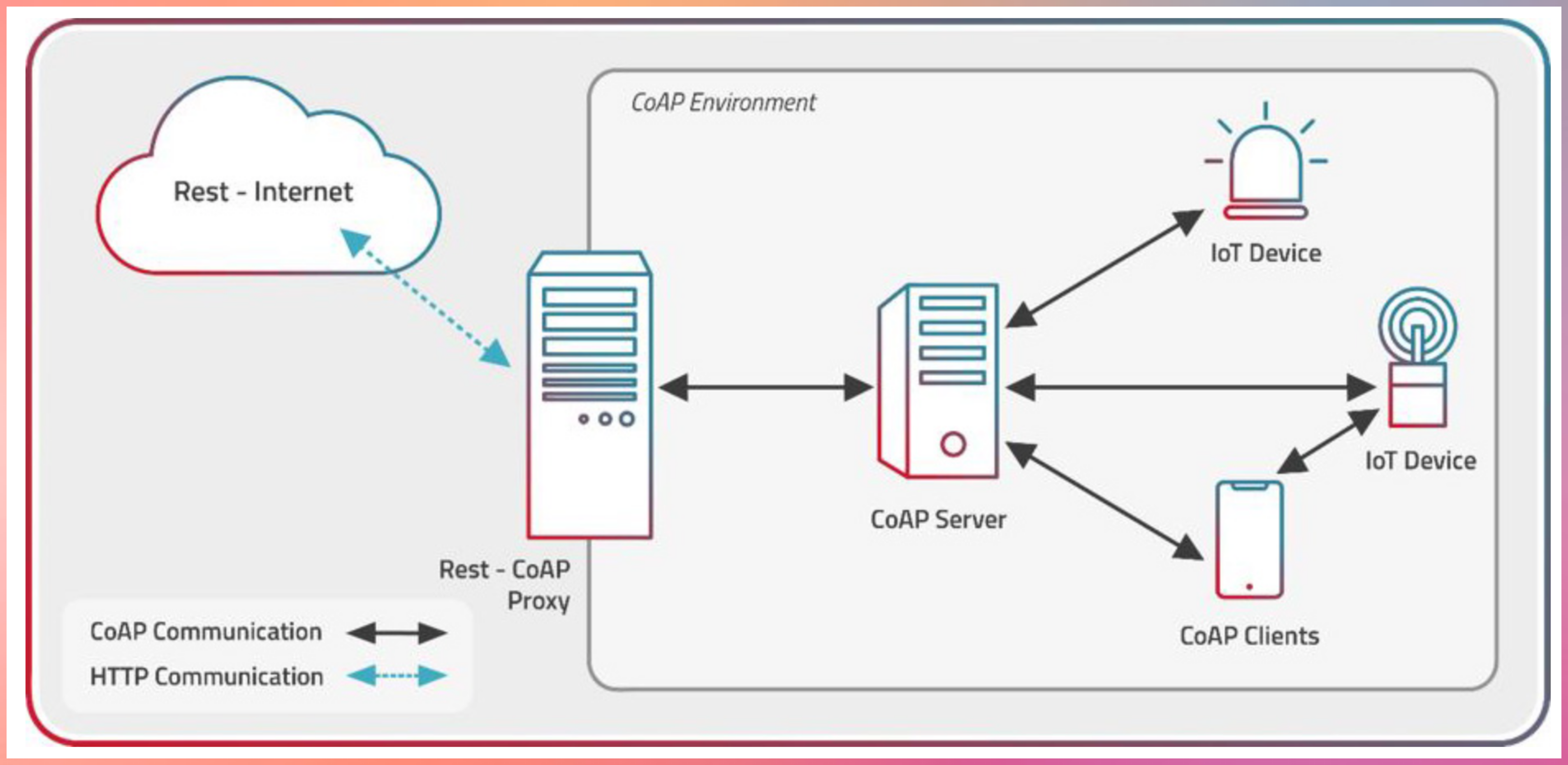

6. CoAP (Constrained Application Protocol)

Description: A specialized web transfer protocol for use with constrained nodes and networks in the IoT.

Key Characteristics

- It’s a specialized web transfer protocol for resource-constrained IoT devices

- Works similarly to HTTP but optimized for IoT needs

- Uses UDP (User Datagram Protocol) instead of TCP, making it lighter and faster

- Built for machine-to-machine (M2M) applications

Use Cases:

- Smart energy and utility metering

- Building automation

- Environmental monitoring

- Resource-constrained devices

Main Features

- Built-in Resource Discovery

- Support for multicast and broadcast messages

- Simple proxy and caching capabilities

- Low overhead and parsing complexity

- Asynchronous message exchange

- URI support similar to HTTP (coap://endpoint/path)

Apart from this there are few more Z-Wave, LTE-M, RFID

[Avg. reading time: 4 minutes]

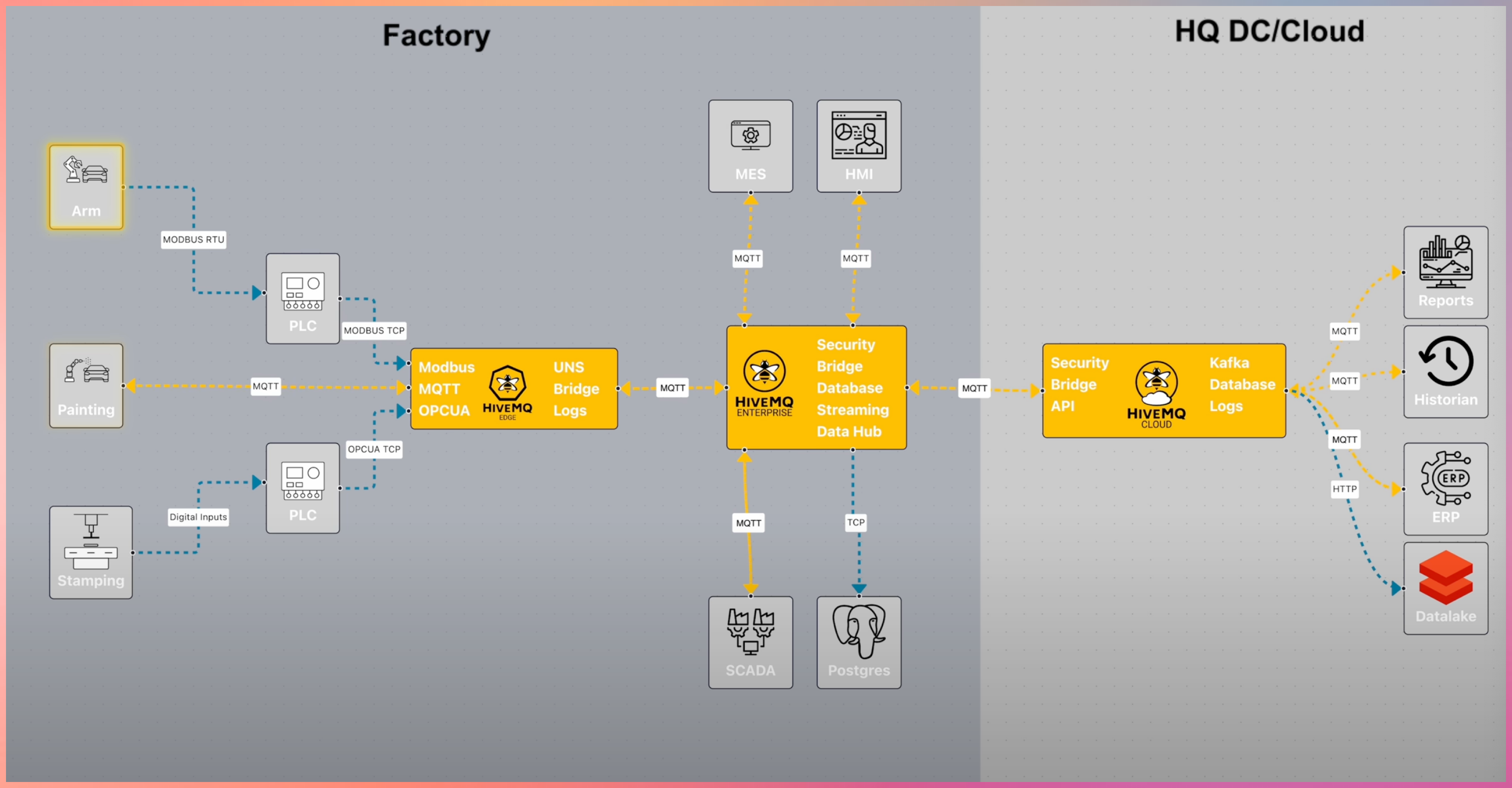

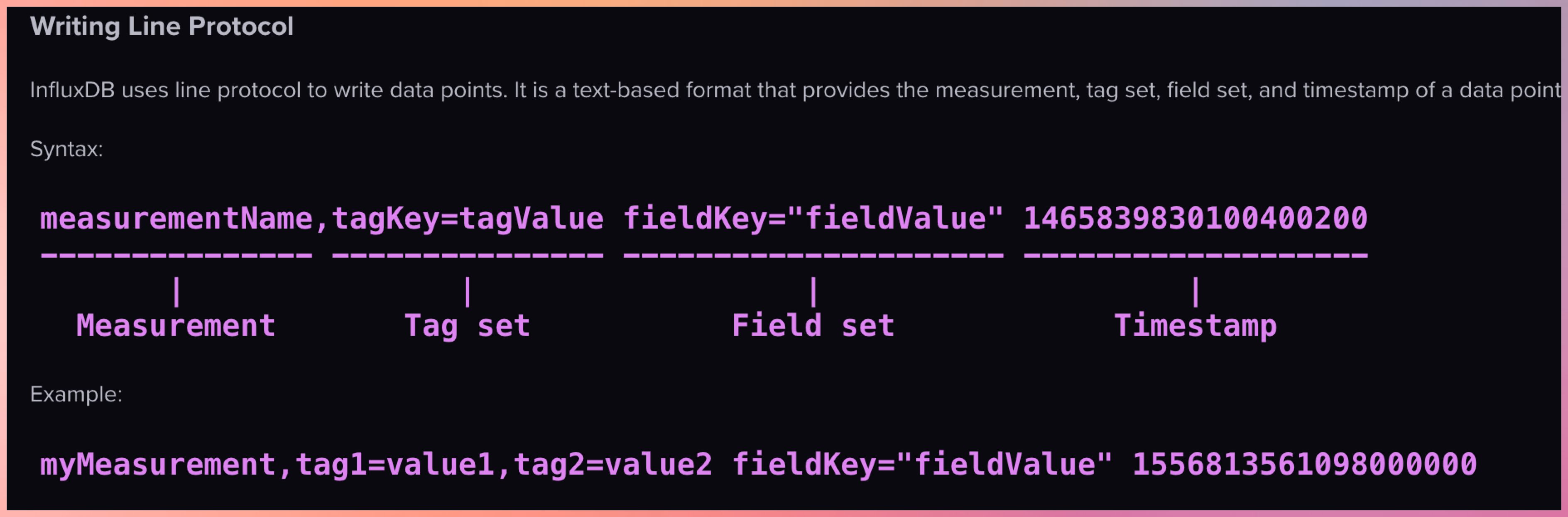

IoT Protocol Stack Overview

Many IoT protocols span multiple layers.

This stack is a conceptual view used to understand responsibilities, not a strict OSI mapping.

| Layer | Purpose | Examples |

|---|---|---|

| Physical Layer | Handles hardware-level transmission such as sensors, actuators, radios, and modulation. | LoRa, BLE (PHY), Zigbee (PHY), Wi-Fi, Cellular (NB-IoT, LTE-M) |

| Data Link Layer | Manages MAC addressing, framing, error detection, and local delivery. | IEEE 802.15.4, BLE Link Layer, LoRaWAN |

| Network Layer | Handles addressing and routing across networks (IP or adapted IP). | IPv6, 6LoWPAN, RPL |

| Transport Layer | Provides end-to-end data delivery and reliability where required. | UDP, TCP |

| Security Layer | Ensures encryption, authentication, and integrity. | DTLS, TLS |

| Application Layer | Defines messaging, device interaction, and application semantics. | MQTT, CoAP, HTTP, LwM2M, AMQP |

IoT Stack Preferred Languages

| Stack Layer | Preferred Languages | Why |

|---|---|---|

| Lower Stack (Firmware / Device) | C / C++ / Rust (emerging) | Direct hardware access, deterministic performance, low memory footprint, real-time constraints, zero-cost abstractions. |

| Middle Stack (Gateway / Edge) | Rust / Python | Protocol translation, buffering, edge analytics, balance of performance and developer productivity. |

| Upper Stack (Cloud / Data) | Rust / Python | Large-scale data processing, APIs, stream processing, ML orchestration, cloud-native services. |

[Avg. reading time: 3 minutes]

Layers of IoT - Lower Stack

IoT architecture typically consists of several layers, each serving a specific function in the overall system. These layers can be broadly divided into the lower stack and the upper stack.

The lower stack focuses on the physical and network aspects of IoT systems. It includes the following layers:

Physical Devices and Sensors:

Devices and sensors that collect data from the environment. Examples: Smart thermostats, industrial sensors, wearable health monitors.

Device Hardware and Firmware:

Microcontrollers, processors, and firmware that manage device operations. Ensures proper functioning and communication of IoT devices.

Connectivity and Network Layer:

Communication protocols (Wi-Fi, Bluetooth, Zigbee, LoRaWAN, etc.) that transmit data. Network hardware like routers and gateways that facilitate data transmission.

Edge Computing:

Edge devices that process data locally to reduce latency and bandwidth usage. Edge analytics for real-time decision-making without relying on cloud processing.

Power Management:

Battery technologies and energy harvesting methods to power IoT devices. Ensures prolonged operational life of remote and portable devices.

[Avg. reading time: 5 minutes]

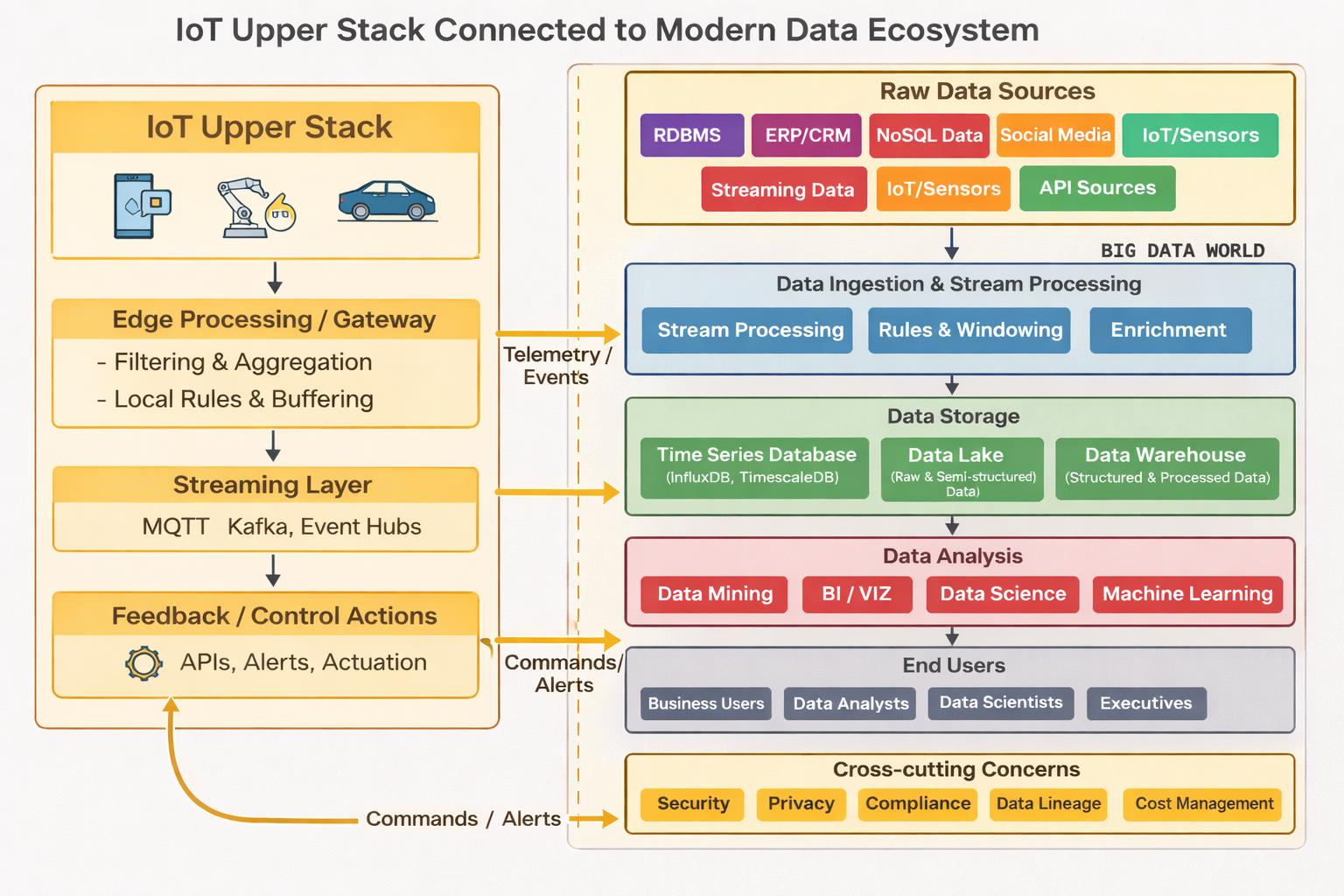

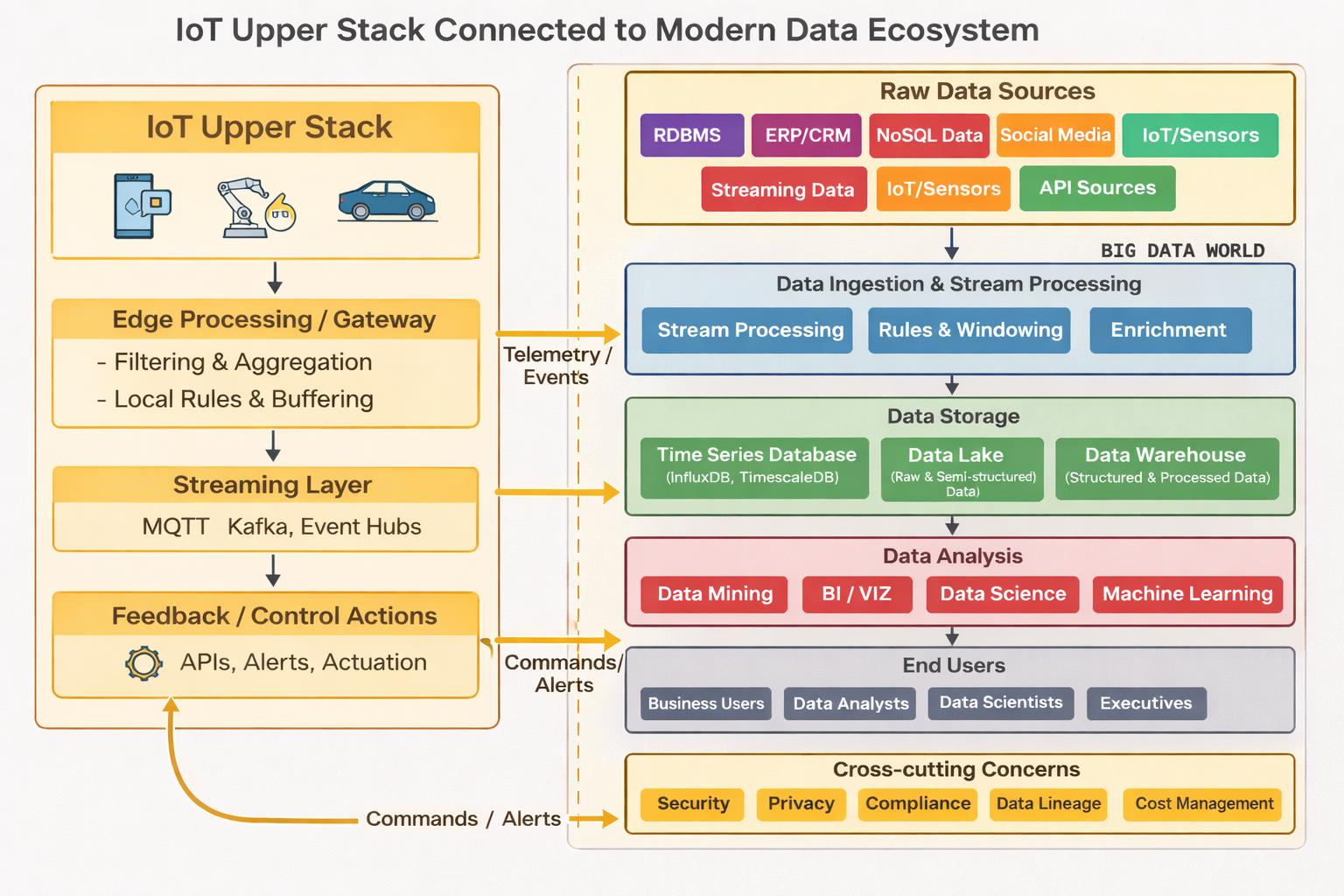

Layers of IoT - Upper Stack

IoT architecture typically consists of several layers, each serving a specific function in the overall system. These layers can be broadly divided into the lower stack and the upper stack.

The upper stack deals with application, data processing, and user interaction aspects of IoT systems. It includes the following layers:

Data Ingestion Layer

- Different Data formats (JSON, Binary)

- Message Brokers and queuing systems (RabbitMQ, Apache Kafka)

Data Processing & Storage

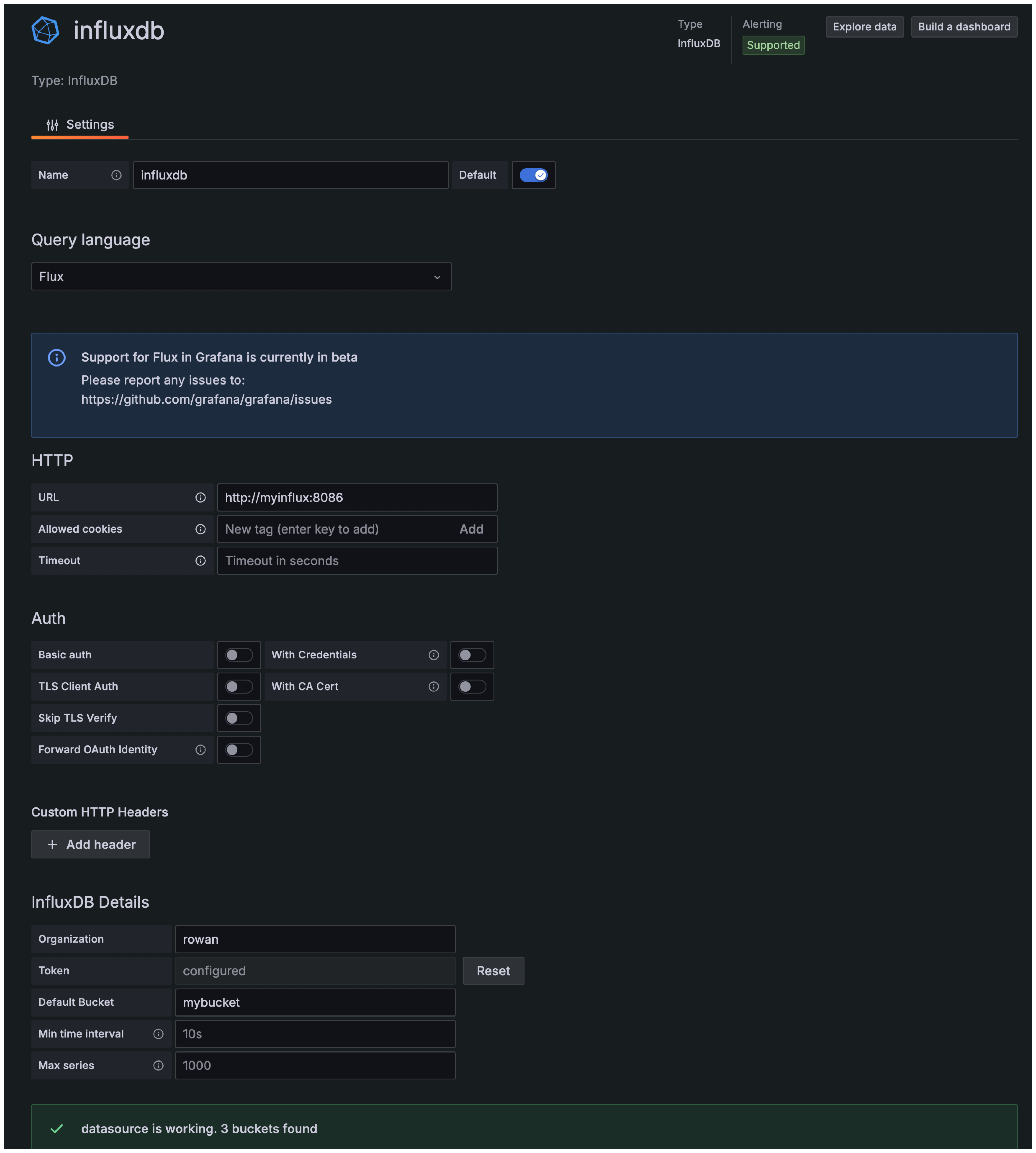

- Time Series Databases like InfluxDB / TimescaleDB.

- Hot vs Cold storage strategies.

- Data aggregation techniques.

- Edge vs Cloud processing decisions.

Analytical Layer

- Realtime analytics

- Vizualization frameworks and tools

- Anomaly detection systems

Application Interface / Enablement

- API (RESTful services)

- User authentication / authorization

Enterprise Integration

- Data transformation and mapping

- Integration with legacy systems

#upperstack #data #integrationlayer

[Avg. reading time: 3 minutes]

Puzzle

1. For each of the following IoT components, identify whether it belongs to the upper stack or the lower stack and explain why.

-

1.1. A mobile app that allows users to control their home lighting system.

-

1.2. A sensor that measures soil moisture levels in a farm.

-

1.3. A gateway that translates Zigbee protocol data to Wi-Fi for transmission to the cloud.

-

1.4. A cloud-based analytics platform that processes data from smart meters.

-

1.5. Firmware running on a smart thermostat that controls HVAC systems.

2. Determine whether the following statements are true or false.

-

2.1 Edge computing is part of the upper stack in IoT systems.

-

2.2 User authentication and data encryption are important aspects of the lower stack.

-

2.3 A smart refrigerator that sends notifications to your phone about expired food items involves both upper and lower stack components.

-

2.4 Zigbee and Bluetooth are commonly used for high-bandwidth IoT applications.

-

2.5 Predictive maintenance in industrial IoT primarily utilizes data from the upper stack.

[Avg. reading time: 2 minutes]

Data Processing

[Avg. reading time: 5 minutes]

Application Layer

Application Protocols

Lightweight protocols designed for IoT communication:

MQTT (Message Queuing Telemetry Transport):

Device → MQTT Broker → Server

Publish-subscribe model over TCP/IP.

Ideal for unreliable networks (e.g., remote sensors).

CoAP (Constrained Application Protocol):

RESTful, UDP-based protocol for low-power devices.

Features: Observe mode, resource discovery, DTLS security.

HTTP/HTTPS:

Used for cloud integration (less efficient than CoAP/MQTT).

LwM2M (Lightweight M2M):

Device management protocol built on CoAP.

Data Formats

JSON: Human-readable format for APIs and web services.

CBOR (Concise Binary Object Representation): Binary format for efficiency (used with CoAP).

XML: Less common due to larger payload size.

APIs and Services

RESTful APIs: Enable integration with cloud platforms (e.g., AWS IoT, Azure IoT).

WebSocket: Real-time bidirectional communication.

Device Management: Firmware updates, remote configuration (via LwM2M).

Security Mechanisms

DTLS (Datagram TLS): Secures CoAP communications.

TLS/SSL: Used for MQTT and HTTP.

Authentication: OAuth, API keys, X.509 certificates.

Why the Application Layer Matters

Efficiency: Protocols like CoAP minimize overhead for low-power devices.

Scalability: Supports thousands of devices in large-scale deployments.

Interoperability: Enables integration with existing web infrastructure (e.g., HTTP).

Security: Ensures data integrity and confidentiality in sensitive applications.

Challenges in IoT Application Layers

Fragmentation: Multiple protocols (CoAP, MQTT, HTTP) complicate interoperability.

Resource Constraints: Limited compute/memory on devices restricts protocol choices.

Latency: Real-time applications require optimized data formats and protocols.

#applicationlayer #protocols #formats #api #services

[Avg. reading time: 18 minutes]

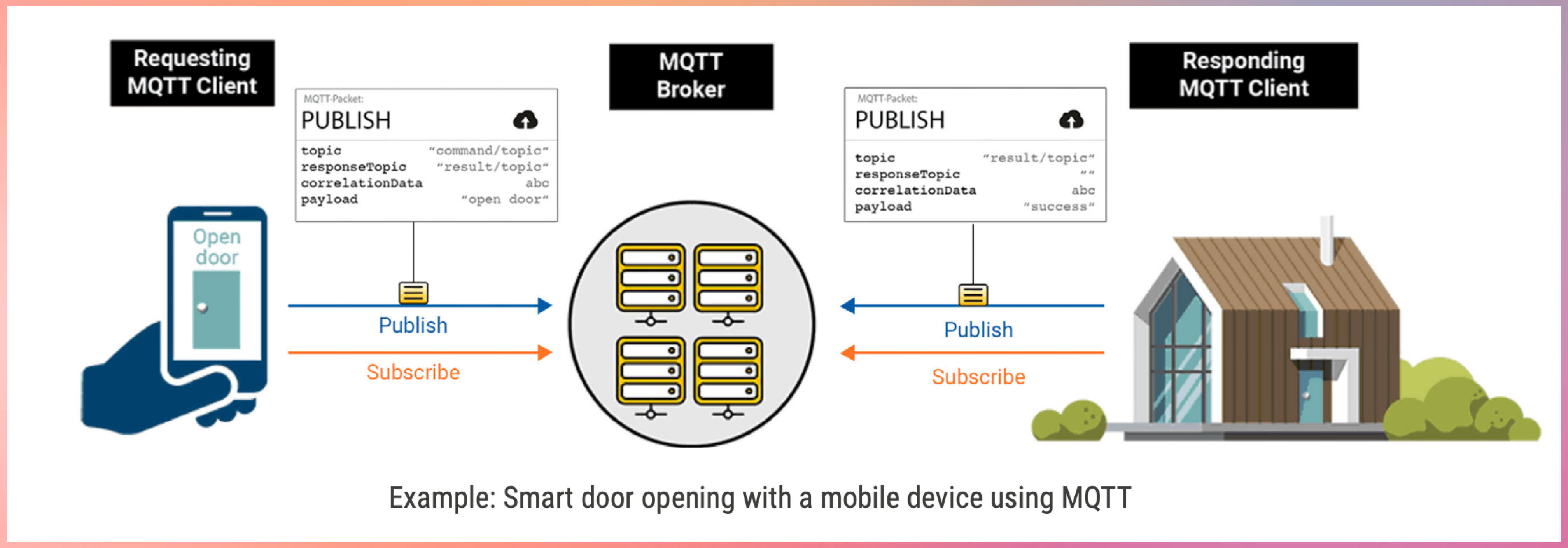

MQTT - Message Queuing Telemetry Transport

MQTT is one of the most widely used messaging protocols in the Internet of Things (IoT).

It was originally developed by IBM in 1999 and later standardized by OASIS. MQTT became popular in IoT because it is simple, lightweight, and designed for unreliable networks.

MQTT works well on:

- Low bandwidth networks

- High latency connections

- Intermittent or unreliable connectivity

Unlike HTTP, MQTT uses a binary message format, making it far more efficient for constrained devices such as sensors and embedded systems.

Why MQTT Exists

Traditional request–response protocols like HTTP are inefficient for IoT devices.

MQTT was designed to:

- Minimize network usage

- Reduce device CPU and memory consumption

- Support asynchronous, event-driven communication

Work reliably even when devices disconnect frequently.

Core MQTT Concepts

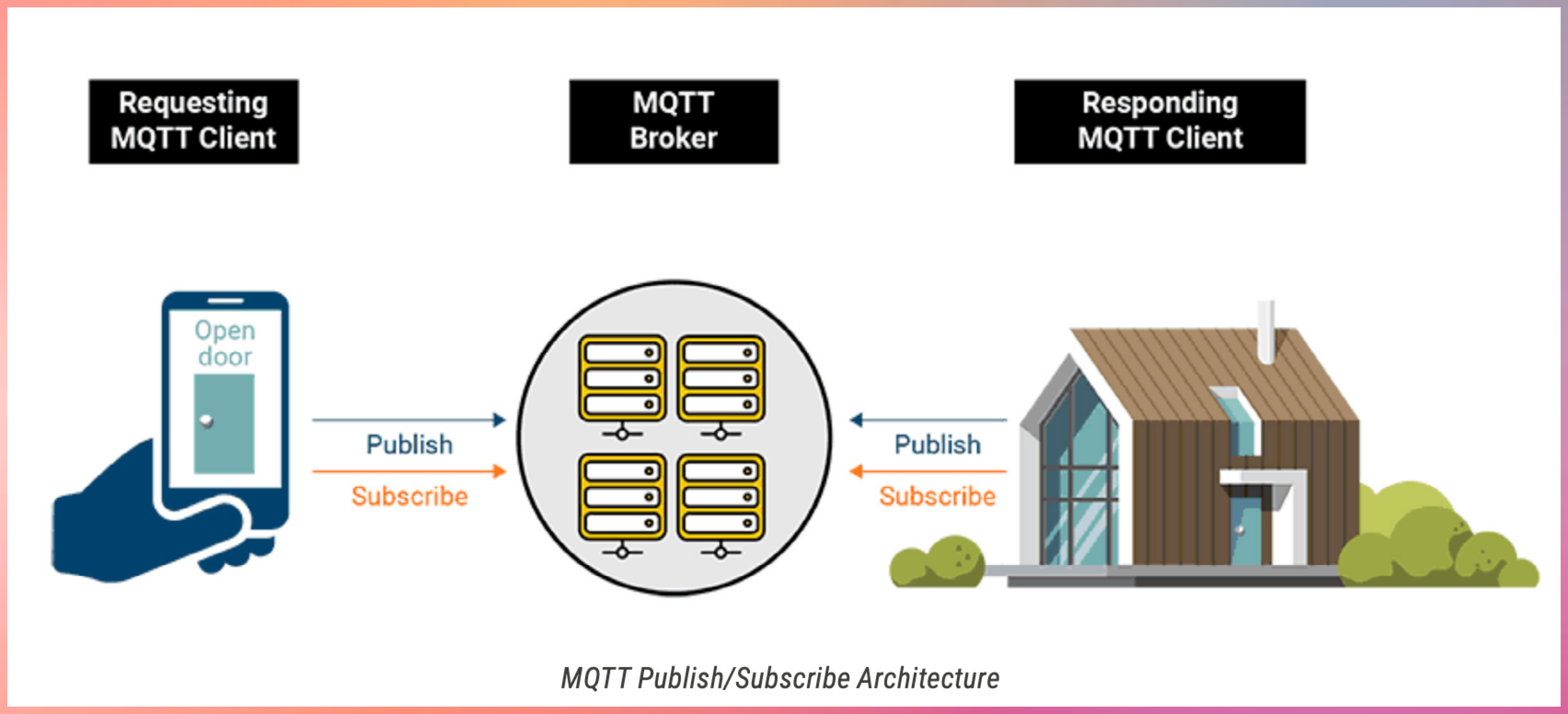

- Publish–Subscribe Model

- MQTT uses a publish–subscribe architecture.

- Devices publish messages to a broker

- Devices subscribe to topics they are interested in

- The broker routes messages to matching subscribers

- Devices never communicate directly with each other.

MQTT Components

MQTT Broker

- The broker is the central message hub.

- Think of it like a post office:

- Receives messages from publishers

- Filters messages by topic

- Delivers messages to subscribers

Common brokers:

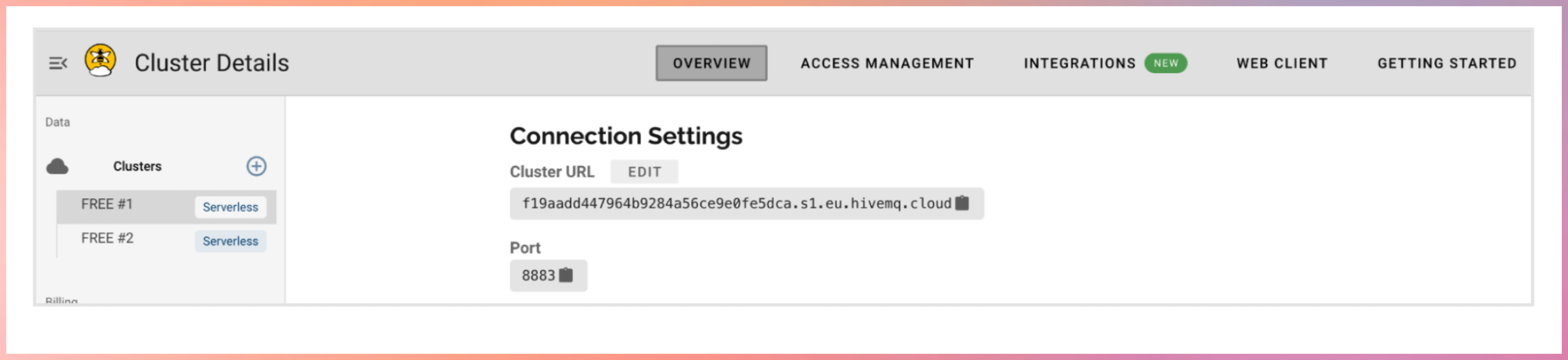

- Open source: Mosquitto

- Commercial: HiveMQ

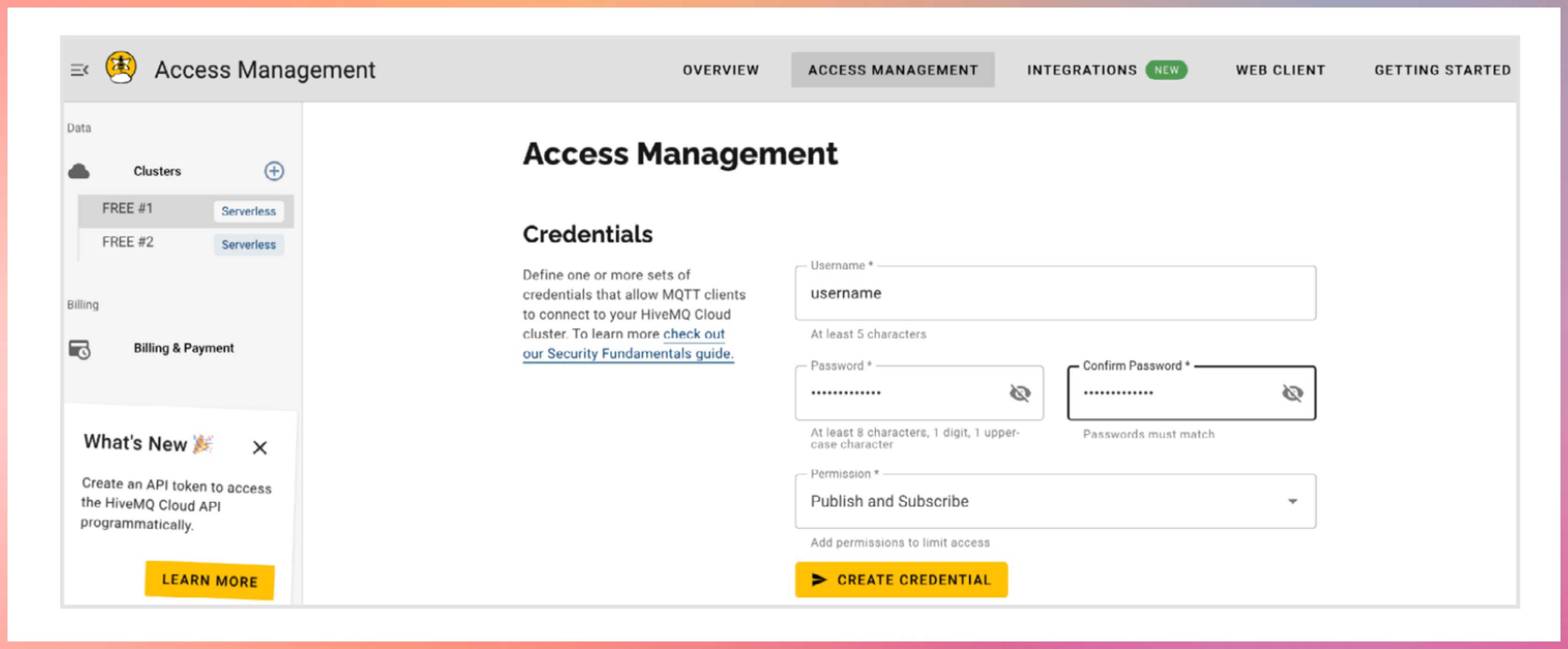

Register with hivemq cloud

Publishers

Devices that send data

Example:

- Temperature sensor publishing readings

- Garage door device publishing open or close status

Subscribers

Devices that receive data

Example:

- Mobile app receiving temperature updates

- Backend system monitoring device health

Topics

Topics are hierarchical strings used to route messages.

Example:

home/livingroom/temperature

- Publishers send messages to a topic

- Subscribers subscribe to topics of interest

- The broker matches topics and delivers messages

Topic Wildcards

MQTT supports topic wildcards for flexible subscriptions.

Single-level wildcard

- Matches exactly one level

Example:

home/+/temperature

Multi-level wildcard

Matches all remaining levels

Example:

home/#

Key Features of MQTT

- Lightweight and Efficient

- Small packet size

- Minimal protocol overhead

- Ideal for constrained devices

- Bidirectional Communication

- Devices can both publish and subscribe

- Enables real-time updates and control

- Highly Scalable

- Supports thousands to millions of devices

- Widely used in large IoT and IIoT deployments

- Configurable Reliability

- Supports different Quality of Service levels

- Lets you trade reliability for performance

- Session Persistence and Buffering

- Brokers can store messages when clients disconnect

- Messages are delivered when clients reconnect

- Security Support

- MQTT itself has no built-in security

- Security is added using:

- TLS encryption

- Client authentication

- Access control at the broker

graph LR

B[MQTT Broker]

CD1[Client Device]

CD2[Client Device]

CD3[Client Device]

CD4[Client Device]

CD5[Client Device]

CD1 -->|Topic 2| B

CD1 -->|Topic 1| B

CD2 -->|Topic 2| B

B -->|Topic 2| CD3

B -->|Topic 1

Topic 3| CD4

B -->|Topic 3| CD5

Quality of Service (QoS)

MQTT defines three QoS levels for message delivery. QoS is coordinated by the broker.

QoS 0 – At most once

- No acknowledgment

- Messages may be lost

- Lowest latency

- Use when message loss is acceptable

- Example: Temperature sensor every 2 seconds. High volume of data.

QoS 1 – At least once

- Message delivery is acknowledged

- Messages may be duplicated

- Commonly used in IoT

- Use when Message loss is unacceptable and duplicate messages can be handled

- Deduplication handled by message id.

- Example: Smart meter readings. Door open/close.

QoS 2 – Exactly once

- Guarantees single delivery

- Highest overhead

- Increased latency

- Use only when message loss and duplication are both unacceptable.

- Example: control commands, critical alerts, factory machine shutdown.

Higher QoS levels consume more network and compute resources.

Pub QoS 1, Sub QoS 0 → delivered as QoS 0

Pub QoS 2, Sub QoS 1 → delivered as QoS 1

Pub QoS 0, Sub QoS 2 → delivered as QoS 0

Message Persistence

Message persistence ensures messages are not lost when clients disconnect.

Non-persistent (Default)

- Messages are not stored

- Lost if subscriber is offline

- Suitable for non-critical data

Queued Persistent

- Broker stores messages for offline clients

- Messages delivered when client reconnects

Similar to: Emails waiting on a server until you connect

Persistent with Acknowledgment

- Messages stored until acknowledged

- Messages resent until confirmation

Used when: Guaranteed processing is required

Persistent Session Stores

When persistence is enabled, brokers may store:

- Client ID

- Subscription list

- Unacknowledged QoS messages

- Queued messages

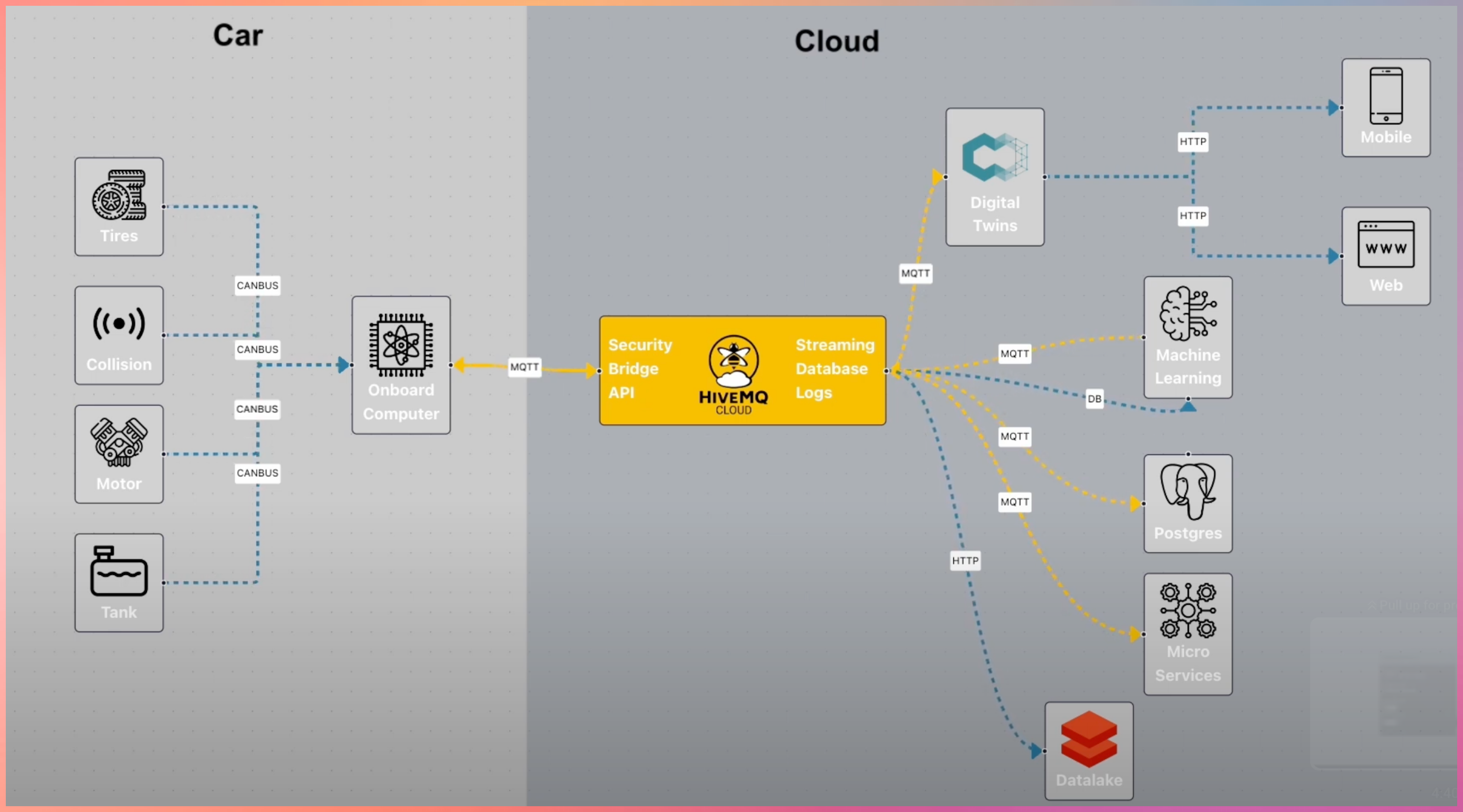

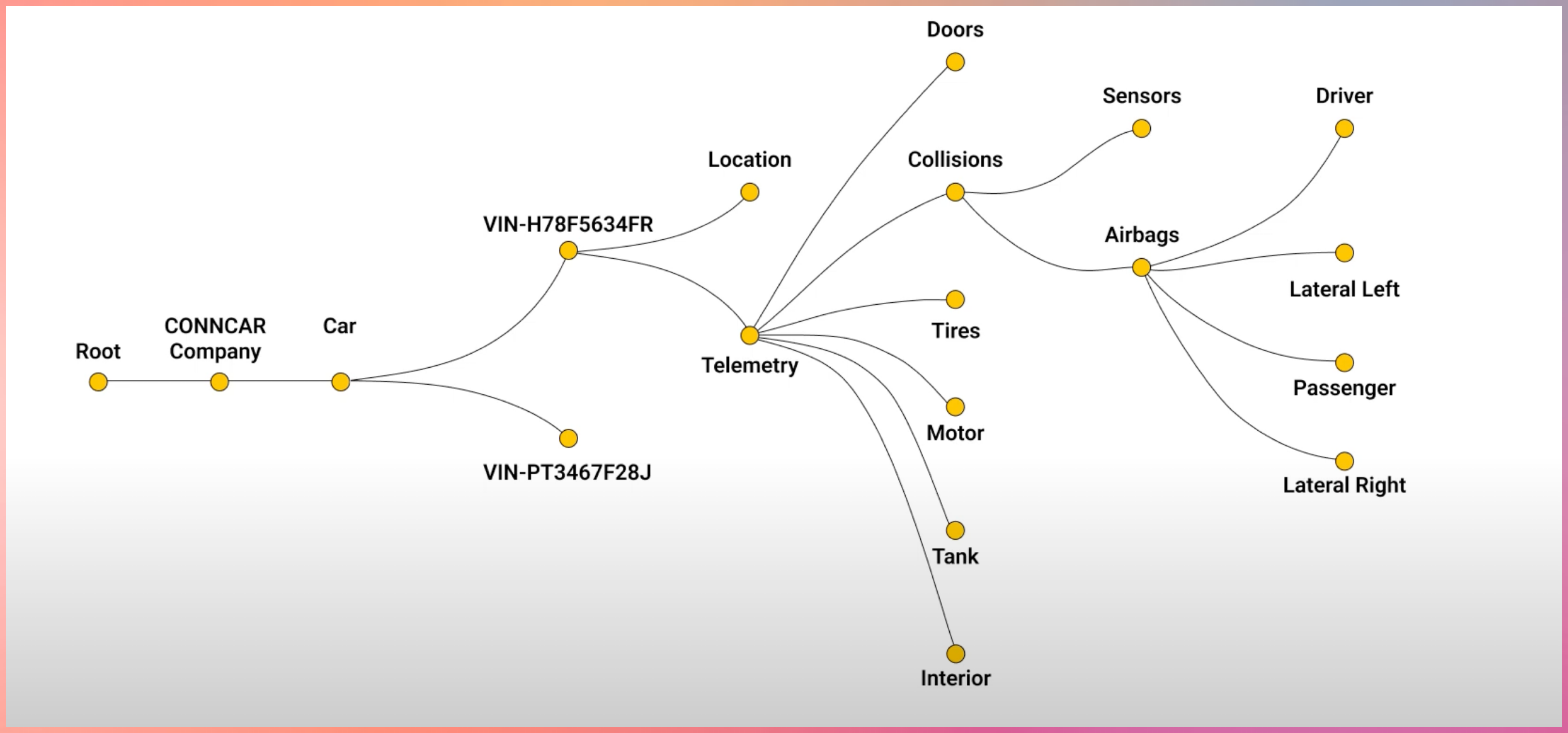

CONN Car Company

Vehicles are shifting from hardware to Software Defined Vehicles. (EVs like Tesla)

MQTT is used for:

- Telemetry streaming

- Remote diagnostics

- Over-the-air updates

- Feature enablement

EV companies use MQTT to connect vehicles, cloud systems, and mobile apps reliably.

MQTT doesn’t stop here

MQTT integrates with:

- Cloud platforms

- Data pipelines

- Streaming systems

- Analytics and monitoring tools

Source YouTube Links

(https://www.youtube.com/watch?v=brUsw_H9Gq8)

(https://www.youtube.com/watch?v=k103_LhF05w)

Advanced Learning about Brokers

https://www.hivemq.com/blog/mqtt-brokers-beginners-guide/

Download the Open Source Broker to learn more https://mosquitto.org/

#mqtt #http #broker #publisher #subscriber

1: http://hivemq.com

[Avg. reading time: 8 minutes]

JSON

JSON (JavaScript Object Notation) is a lightweight, text-based data format that’s easy to read for both humans and machines. It was derived from JavaScript but is now language-independent, making it one of the most popular formats for data exchange between applications. Key Concepts:

What is JSON Used For?

- Storing configuration settings

- Exchanging data between web servers and browsers

- APIs (Application Programming Interfaces)

- Storing structured data in files or databases

- Mobile app data storage

JSON Data Types:

Strings: Text wrapped in double quotes

{"name": "Rachel Green"}

Numbers: Integer or floating-point

{"age": 27, "height": 5.5}

Booleans: true or false

{"isStudent": true}

null: Represents no value

{"middleName": null}

Arrays: Ordered lists of values

{

"hobbies": ["shopping", "singing", "swimming"]

}

Objects: Collections of key-value pairs

{

"address": {

"street": "123 Main St",

"city": "NYC",

"zipCode": "10001"

}

}

Important Rules:

- All property names must be in double quotes

- Values can be strings, numbers, objects, arrays, booleans, or null

- Commas separate elements in arrays and properties in objects

- No trailing commas allowed

- No comments allowed in JSON

- Must use UTF-8 encoding

Example

{

"studentInfo": {

"firstName": "Monica",

"lastName": "Geller",

"age": 22,

"isEnrolled": true,

"courses": [

{

"name": "Web Development",

"code": "CS101",

"grade": 95.5

},

{

"name": "Database Design",

"code": "CS102",

"grade": 88.0

}

],

"contact": {

"email": "monica.g@friends.com",

"phone": null,

"address": {

"street": "456 College Ave",

"city": "Columbia",

"state": "NY",

"zipCode": "13357"

}

}

}

}

Dont’s with JSON

- Using single quotes instead of double quotes

- Not enclosing property names in quotes

- Adding trailing commas

- Missing closing brackets or braces

- Using undefined or functions (not allowed in JSON)

- Adding comments (not supported in JSON)

Best Practices

- Always validate JSON using a JSON validator tool

- Pay attention to proper nesting of objects and arrays

- Ensure all opening brackets/braces have matching closing ones

- Check for proper use of commas

camelCase (e.g., firstName):

- Most popular in JavaScript/JSON

- Easy to read and type

- Matches JavaScript convention

Example:

{

"firstName": "John",

"lastLoginDate": "2024-12-20",

"phoneNumber": "555-0123"

}

snake_case (underscores, e.g., first_name):

- Popular in Python and SQL

- Very readable

- Clear word separation

Example:

{

"first_name": "John",

"last_login_date": "2024-12-20",

"phone_number": "555-0123"

}

kebab-case (hyphens, e.g., first-name):

- Common in URLs and HTML attributes

- NOT recommended for JSON

- Can cause issues because hyphen is also the subtraction operator

- Requires bracket notation to access in JavaScript

Example of why it’s problematic:

// This won't work

data.first-name // JavaScript interprets as data.first minus name

// Must use bracket notation

data["first-name"] // Works but less convenient

[Avg. reading time: 9 minutes]

CBOR (Concise Binary Object Representation)

CBOR is a compact binary data format designed for efficiency, speed, and low overhead. It keeps JSON’s simplicity while delivering 30–50% smaller payloads and faster serialization, making it ideal for IoT, embedded systems, and high-throughput APIs.

https://cbor.dev

Why CBOR

JSON is human-friendly but wasteful for machines.

CBOR is Binary

- Binary encoding instead of text

- Smaller payloads

- Faster parsing

- Native binary support

- Better fit for constrained environments

Use CBOR when:

- Bandwidth is expensive

- Latency matters

- Devices are constrained

- Message rates are high

Key Features

Binary Format

- Compact payloads

- Lower bandwidth usage

- Faster transmission

Self-Describing

- Encodes type information directly

- No external schema required to decode

Schema-Less (Schema Optional)

- Works like JSON

- Supports validation using CDDL (Consise Data Definition Language)

Fast Serialization & Parsing

- No expensive string parsing

- Lower CPU overhead

Extensible

- Supports semantic tags for:

- Date / Time

- URIs

- Application-specific meanings

Data Types & Structure

CBOR natively supports JSON-like data structures:

Primitive Types:

- Integers (positive, negative)

- Byte strings (bstr)

- text strings (tstr)

- Floating-point numbers (16,32,64 bit)

- Booleans (true, false)

- null, and undefined values.

Composite Types:

- Arrays (ordered lists)

- Maps (key-value pairs, similar to JSON objects)

Semantic Tags:

- Optional tags to add meaning (e.g., Tag 0 for date/time strings, Tag 32 for URIs).

Example: CBOR vs. JSON

JSON Object

{

"id": 123,

"name": "Temperature Sensor",

"value": 25.5,

"active": true

}

CBOR to/from JSON

CBOR Playground

CBOR Encoding (Hex Representation)

B9 0004 # map(4)

62 # text(2)

6964 # "id"

18 7B # unsigned(123)

64 # text(4)

6E616D65 # "name"

72 # text(18)

54656D70657261747572652053656E736F72 # "Temperature Sensor"

65 # text(5)

76616C7565 # "value"

FB 4039800000000000 # primitive(4627870829588250624)

66 # text(6)

616374697665 # "active"

F5 # primitive(21)

Size Comparison:

- JSON: ~70 bytes.

- CBOR: ~45 bytes (35% smaller)

| Feature | CBOR | JSON/XML |

|---|---|---|

| Payload Size | Compact binary encoding (~30-50% smaller). | Verbose text-based encoding |

| Parsing Speed | Faster (no string parsing). | Slower (text parsing required). |

| Data Types | Rich (supports bytes, floats, tags). | Limited (no native byte strings). |

| Schema Flexibility | Optional schemas (CDDL). | Often requires external schemas. |

| Human Readability | Requires tools to decode. | Easily readable. |

Limitations

Human-Unreadable: Requires tools (e.g., CBOR Playground) to decode.

Schema Validation: While optional, validation requires external tools like CDDL (Concise Data Definition Language).

When to Use CBOR

-

Low-bandwidth networks (e.g., IoT over LoRaWAN or NB-IoT).

-

High-performance systems needing fast serialization.

-

Interoperability between devices and web services.

Demo Code

git clone https://github.com/gchandra10/python_cbor_examples

CBOR + MQTT = Perfect Match

CBOR is ideal for MQTT payloads

Demonstrate how cbor can be used with mqtt.

[Avg. reading time: 4 minutes]

XML

XML (eXtensible Markup Language) is moderately popular in IoT.

With JSON gaining popularity, XML is still used in Legacy systems and regulated environments such as Govt/Military systems.

It uses XSD (Extended Schema Definition) to enforce strict data validation, ensuring integrity in critical applications like healthcare.

Legacy systesm use SOAP-based web services (newer ones use REST API) often use XML, rquiring IoT devices to adopt XML for compatibility.

<sensorData>

<deviceId>TEMP_SENSOR_01</deviceId>

<location>living_room</location>

<reading>

<temperature>23.5</temperature>

<unit>Celsius</unit>

<timestamp>2025-01-29T14:30:00</timestamp>

</reading>

</sensorData>

Limitations of XML in IoT

- Verbosity: Larger payloads increase bandwidth and storage costs.

- Processing Overhead: Parsing XML can strain low-power IoT devices.

- Modern Alternatives: JSON and binary formats (e.g., Protocol Buffers) are more efficient for most IoT use cases.

Here’s the XML vs. JSON Trade-offs comparison formatted as a markdown table:

| Factor | XML | JSON |

|---|---|---|

| Payload Size | Verbose (larger files) | Compact (better for low-bandwidth IoT) |

| Parsing Speed | Slower (complex structure) | Faster (lightweight parsing) |

| Validation | Mature (XSD) | Growing (JSON Schema) |

| Adoption in New Projects | Rare (outside legacy/regulated use cases) | Dominant (preferred for new IoT systems) |

[Avg. reading time: 6 minutes]

TCP & UDP

- Transmission Control Protocol

- User Datagram Protocol

TCP and UDP are transport protocols. Their only job is to decide how data moves across the network.

Common IoT problems

- Sensors generate data continuously

- Networks are unreliable

- Devices are constrained

- Some data losses are acceptable and some are not.

UDP

- Sends data without confirmation

- No retries

- No ordering

- No connection

- Very low overhead

UDP Usecases in IOT

- Battery powered devices

- High frequency telemetry

- Small payloads

- Occasional loss is acceptable

- Speed matters more than accuracy

Typical IoT usage

- CoAP

- Device discovery

- Heartbeats

- Periodic measurements of Environmental sampling

Example

Smart street lighting

- Each lamp sends a heartbeat every 5 to 10 seconds

- Payload: device_id, status, battery, signal strength

- If ‘n’ heartbeats are missed, mark lamp as offline

- Losing one packet changes nothing.

Vehicle Telematics

- Fleet vehicles send location and health pings

- One ping every few seconds

- Next ping overrides the previous

TCP

- Confirms delivery

- Retries lost data

- Preserves order

- Maintains a connection

- Higher overhead

TCP use cases in IoT

- Data must not be lost

- Order matters

- Sessions last minutes or hours

Typical IoT usage

- MQTT

- HTTP

- HTTPS

- TLS secured pipelines

With MQTT

- Ordered messages

- Delivery guarantees using QoS

- Persistent sessions

- Broker side buffering

- Fan out to many subscribers

UDP vs TCP

| Question | UDP | TCP |

|---|---|---|

| Is delivery guaranteed | No | Yes |

| Is ordering preserved | No | Yes |

| Is it lightweight | Yes | No |

| Does MQTT use it | No | Yes |

| Does CoAP use it | Yes | No |

| Best for battery devices | Yes | Sometimes |

| Best for critical data | No | Yes |

SENSOR

|

|

-----------------

| |

UDP Path TCP Path

| |

No confirmation Confirmed delivery

No retry Retry on failure

Possible loss Ordered messages

| |

CoAP MQTT Broker

|

Persistent sessions

|

Cloud Applications

````<span id='footer-class'>Ver 6.0.5</span>

<footer id="last-change">Last change: 2026-02-05</footer>````[Avg. reading time: 8 minutes]

MessagePack

A compact binary data interchange format

What is MessagePack

MessagePack is an efficient binary serialization format designed for fast and compact data exchange between systems.

Core properties

- Compact compared to text formats like JSON

- Fast serialization and deserialization

- Cross-language support across many ecosystems

- Flexible data model with optional extensions

Why MessagePack

MessagePack solves a very specific problem:

- JSON is easy to read but inefficient on the wire

- IoT and distributed systems care about bytes, latency, and CPU

- MessagePack keeps JSON-like simplicity but removes text overhead

In short, JSON Data model with Binary efficiency.

Key Use Cases

- IoT telemetry and device data

- Edge gateways aggregating high-frequency events

- Microservice-to-microservice communication

- Caching layers like Redis and Memcached

- Distributed systems logging and checkpoints

MessagePack vs JSON

- Binary and compact

- Faster to parse

- Smaller payloads for most data

- Not human-readable

- Debugging requires tooling

MessagePack vs CBOR

- MessagePack is simpler and lighter

- CBOR supports semantic tags like datetime and URI

- CBOR supports deterministic encoding for hashing and signatures

- Size differences are workload-dependent, not guaranteed

Comparison with Similar Formats

| Feature | MessagePack | JSON | CBOR |

|---|---|---|---|

| Encoding | Binary | Text | Binary |

| Human-readable | No | Yes | No |

| Data Size | Small (varies) | Large | Small (varies) |

| Schema Required | No | No | No |

| Standardization | Community | RFC 8259 | RFC 8949 |

| Binary Data Support | Native | Base64 | Native |

| Semantic Tags | No | No | Yes |

| Deterministic Encoding | No | No | Yes |

Key Differences:

- vs JSON: 20-30% smaller payloads, faster parsing, but not human-readable

- vs CBOR: More compact for simple types, CBOR has better semantic tagging

Basic Operations

packb()converts Python objects to MessagePack bytesunpackb()converts MessagePack bytes back to Python objects

Python Example

git clone https://github.com/gchandra10/python_messagepack_examples.git

MessagePack in IoT and Edge Systems

- Commonly used in edge gateways and ingestion pipelines

- Efficient for short, frequent telemetry messages

- Suitable for MQTT payloads where the broker is payload-agnostic

- Rarely used directly in regulated firmware layers

Important:

- MQTT does not care about payload format

- MessagePack is an application-layer choice, not a protocol requirement

Summary

When to Choose MessagePack

- Bandwidth or memory is constrained

- JSON is too verbose

- Binary data is common

- Speed matters more than readability

- Schema flexibility is acceptable

What MessagePack Does Not Do

- No schema enforcement

- No backward compatibility guarantees

- No semantic meaning for fields

- No built-in validation

- No deterministic encoding

Devices like AppleWatch, Fitbit use Protocol Buffers for strict schema FDA regulated enforcement.

[Avg. reading time: 12 minutes]

Protocol Buffers

What are Protocol Buffers

- A method to serialize structured data into binary format

- Created by Google

- Its like JSON, but smaller and faster.

- Protocol Buffers are more commonly used in industrial IoT scenarios.

Why Protobuf is great for IoT

- Smaller size: Uses binary format instead of text, saving bandwidth

- Faster processing: Binary format means less CPU usage on IoT devices

- Strict schema: Helps catch errors early

- Language neutral: Works across different programming languages

- Great for limited devices: Uses less memory and battery power

- Extensibility: Add new fields to your message definitions without breaking existing code.

Industrial Use Cases

- Bridge structural sensors (vibration, stress)

- Factory equipment monitors

- Power grid sensors

- Oil/gas pipeline monitors

- Wind turbine telemetry

- Industrial HVAC systems

Why Industries prefer Protobuf:

- High data volume (thousands of readings per second)

- Need for efficient bandwidth usage

- Complex data structures

- Multiple systems need to understand the data

- Long-term storage requirements

- Cross-platform compatibility needs

graph LR

subgraph Bridge["Bridge Infrastructure"]

S1[Vibration Sensor] --> GW

S2[Strain Gauge] --> GW

S3[Temperature Sensor] --> GW

subgraph Gateway["Linux Gateway (Solar)"]

GW[Edge Gateway]

DB[(Local Storage)]

GW --> DB

end

end

subgraph Communication["Communication Methods"]

GW --> |4G/LTE| Cloud

GW --> |LoRaWAN| Cloud

GW --> |Satellite| Cloud

end

Cloud[Cloud Server] --> DA[Data Analysis]

style Bridge fill:#87CEEB,stroke:#333,stroke-width:2px,color:black

style Gateway fill:#90EE90,stroke:#333,stroke-width:2px,color:red

style Communication fill:#FFA500,stroke:#333,stroke-width:2px,color:black

style Cloud fill:#4169E1,stroke:#333,stroke-width:2px,color:white

style DA fill:#4169E1,stroke:#333,stroke-width:2px,color:white

style GW fill:#000000,stroke:#333,stroke-width:2px,color:white

style DB fill:#800020,stroke:#333,stroke-width:2px,color:white

classDef sensor fill:#00CED1,stroke:#333,stroke-width:1px,color:black

class S1,S2,S3 sensor

Consumer IoT devices (in general)

- Use simpler formats (JSON, proprietary)

- Have lower data volumes

- Work within closed ecosystems (Google Home, Apple HomeKit)

- Don’t need the optimization Protobuf provides

Data Types in Protobufs

Scalar Types:

int32, int64, uint32, uint64, sint32, sint64, fixed32, fixed64, sfixed32, sfixed64 float, double, bool, string, bytes

Composite Types:

- message: Defines a structured collection of other fields.

- enum: Defines a set of named integer constants.

Collections:

- repeated: Allows you to define a list of values of the same type. Like Array.

Steps involved in creating a Proto Buf data file.

Step 1: Define the Data Structure of your data file as .proto text file.

Ex: my_data.proto

syntax = "proto3";

message MyData {

int32 id = 1;

string name = 2;

float value = 3;

}

Step 2: Compile the .proto file to Python Class (.pb) or Java Class (.java) using protoc library.

protoc --python_out=. my_data.proto

Generates my_data_pb2.py

Step 3: Use the Generated Python Class file and use it to store data.

Note: Remember protoc –version should be same or closer as protobuf minor version number from pypi library.

In my setup protoc –version = 29.3, pypi protobuf = 5.29.2 Minor version of protobuf is 29.2 which is closer to 29.3

See example.

Demo Script

git clone https://github.com/gchandra10/python_protobuf_demo

flowchart LR

subgraph Sensor["Temperature/Humidity Sensor"]

S1[DHT22/BME280]

end

subgraph MCU["Microcontroller"]

M1[ESP32/Arduino]

end

subgraph Gateway["Gateway/Edge Device"]

G1[Raspberry Pi/\nIntel NUC]

end

subgraph Cloud["Cloud Server"]

C1[AWS/Azure/GCP]

end

S1 -->|Raw Data 23.5°C, 45%| M1

M1 -->|"JSON over MQTT {temp: 23.5,humidity: 45}"| G1

G1 -->|Protocol Buffers\nover HTTPS| C1

[Avg. reading time: 2 minutes]

HTTP Basics

HTTP (HyperText Transfer Protocol) is the foundation of data communication on the web, used to transfer data (such as HTML files and images).

GET - Navigate to a URL or click a link in real life.

POST - Submit a form on a website, like a username and password.

Popular HTTP Status Codes

200 Series (Success): 200 OK, 201 Created.

300 Series (Redirection): 301 Moved Permanently, 302 Found.

400 Series (Client Error): 400 Bad Request, 401 Unauthorized, 404 Not Found.

500 Series (Server Error): 500 Internal Server Error, 503 Service Unavailable.

We already learnt about Monolithic and Microservices.

#http #status #monolithic #microservices

[Avg. reading time: 9 minutes]

Statefulness

The server stores information about the client’s current session in a stateful system. This is common in traditional web applications. Here’s what characterizes a stateful system:

Session Memory: The server remembers past interactions and may store session data like user authentication, preferences, and other activities.

Server Dependency: Since the server holds session data, the same server usually handles subsequent requests from the same client. This is important for consistency.

Resource Intensive: Maintaining state can be resource-intensive, as the server needs to manage and store session data for each client.

Example: A web application where a user logs in, and the server keeps track of their authentication status and interactions until they log out.

sequenceDiagram

participant C as Client

participant LB as Load Balancer

participant S1 as Server 1

participant S2 as Server 2

Note over C,S2: Initial Session Establishment

C->>LB: Initial Request

LB->>S1: Forward Request

S1-->>LB: Response (Session ID)

LB-->>C: Response (Session ID)

rect rgb(255, 255, 200)

Note over C,S2: Sticky Session Established

end

Note over C,S2: Session Continuation

C->>LB: Subsequent Request (with Session ID)

LB->>S1: Forward Request (based on Session ID)

S1-->>LB: Response (Data)

LB-->>C: Response (Data)

rect rgb(255, 255, 200)

Note over C,S2: Session Continues on Server 1

end

Note over C,S2: Session Termination

C->>LB: Logout Request

LB->>S1: Forward Logout Request

S1-->>LB: Confirmation

LB-->>C: Confirmation

rect rgb(255, 255, 200)

Note over C,S2: Session Ended

end

rect rgb(255, 255, 200)

Note right of S2: Server 2 remains unused due to stickiness

end

Stickiness (Sticky Sessions)

Stickiness or sticky sessions are used in stateful systems, particularly in load-balanced environments. It ensures that requests from a particular client are directed to the same server instance. This is important when:

Session Data: The server needs to maintain session data (like login status), and it’s stored locally on a specific server instance.

Load Balancers: In a load-balanced environment, without stickiness, a client’s requests could be routed to different servers, which might not have the client’s session data.

Trade-off: While it helps maintain session continuity, it can reduce the load balancing efficiency and might lead to uneven server load.

Methods of Implementing Stickiness

Cookie-Based Stickiness: The most common method, where the load balancer uses a special cookie to track the server assigned to a client.

IP-Based Stickiness: The load balancer routes requests based on the client’s IP address, sending requests from the same IP to the same server.

Custom Header or Parameter: Some load balancers can use custom headers or URL parameters to track and maintain session stickiness.

[Avg. reading time: 7 minutes]

Statelessness

In a stateless system, each request from the client must contain all the information the server needs to fulfill that request. The server does not store any state of the client’s session. This is a crucial principle of RESTful APIs. Characteristics include:

No Session Memory: The server remembers nothing about the user once the transaction ends. Each request is independent.

Scalability: Stateless systems are generally more scalable because the server doesn’t need to maintain session information. Any server can handle any request.

Simplicity and Reliability: The stateless nature makes the system simpler and more reliable, as there’s less information to manage and synchronize across systems.

Example: An API where each request contains an authentication token and all necessary data, allowing any server instance to handle any request.

sequenceDiagram

participant C as Client

participant LB as Load Balancer

participant S1 as Server 1

participant S2 as Server 2

C->>LB: Request 1

LB->>S1: Forward Request 1

S1-->>LB: Response 1

LB-->>C: Response 1

C->>LB: Request 2

LB->>S2: Forward Request 2

S2-->>LB: Response 2

LB-->>C: Response 2

rect rgb(255, 255, 200)

Note over C,S2: Each request is independent

end

In this diagram:

Request 1: The client sends a request to the load balancer.

Load Balancer to Server 1: The load balancer forwards Request 1 to Server 1.

Response from Server 1: Server 1 processes the request and sends a response back to the client.

Request 2: The client sends another request to the load balancer.

Load Balancer to Server 2: This time, the load balancer forwards Request 2 to Server 2.

Response from Server 2: Server 2 processes the request and responds to the client.

Statelessness: Each request is independent and does not rely on previous interactions. Different servers can handle other requests without needing a shared session state.

Token-Based Authentication

Common in stateless architectures, this method involves passing a token for authentication with each request instead of relying on server-stored session data. JWT (JSON Web Tokens) is a popular example.

[Avg. reading time: 9 minutes]

REST API

REpresentational State Transfer is a software architectural style developers apply to web APIs.

REST APIs provide simple, uniform interfaces because they can be used to make data, content, algorithms, media, and other digital resources available through web URLs. Essentially, REST APIs are the most common APIs used across the web today.

Use of a uniform interface (UI)

HTTP Methods

GET: This method allows the server to find the data you requested and send it back to you.

POST: This method permits the server to create a new entry in the database.

PUT: If you perform the ‘PUT’ request, the server will update an entry in the database.

DELETE: This method allows the server to delete an entry in the database.

Sample REST API

https://api.zippopotam.us/us/08028

http://api.tvmaze.com/search/shows?q=friends

https://jsonplaceholder.typicode.com/posts

https://jsonplaceholder.typicode.com/posts/1

https://jsonplaceholder.typicode.com/posts/1/comments

https://reqres.in/api/users?page=2

https://reqres.in/api/users/2

More examples

http://universities.hipolabs.com/search?country=United+States

https://itunes.apple.com/search?term=pop&limit=1000

https://www.boredapi.com/api/activity

https://techcrunch.com/wp-json/wp/v2/posts?per_page=100&context=embed

CURL

Install curl (Client URL)

curl is a CLI application available for all OS.

brew install curl

Usage

curl https://api.zippopotam.us/us/08028

curl https://api.zippopotam.us/us/08028 -o zipdata.json

Browser based

VS Code based

Using Python

git clone https://github.com/gchandra10/python_read_restapi

Summary

Definition: REST (Representational State Transfer) API is a set of guidelines for building web services. A RESTful API is an API that adheres to these guidelines and allows for interaction with RESTful web services.

How It Works: REST uses standard HTTP methods like GET, POST, PUT, DELETE, etc. It is stateless, meaning each request from a client to a server must contain all the information needed to understand and complete the request.

Data Format: REST APIs typically exchange data in JSON or XML format.

Purpose: REST APIs are designed to be a simple and standardized way for systems to communicate over the web. They enable the backend services to communicate with front-end applications (like SPAs) or other services.

Use Cases: REST APIs are used in web services, mobile applications, and IoT (Internet of Things) applications for various purposes like fetching data, sending commands, and more.

[Avg. reading time: 7 minutes]

CoAP

Introduction

CoAP stands for Constrained Application Protocol, developed by the IETF. (Internet Engineering Task Force).

Constrained Environments

- Energy constraints.

- Memory limitations.

- Processing capibility.

- Unattended network operation.

- Unreliable networks.

- Higher Latency in communication.

Popular Examples:

- Small embedded devices.

- Runs on small capacity batteries.

- Minimum RAM/ROM.

Products

- Window / Door sensors.

- Healthcare Wearables.

Key Features

Request-Response Model: CoAP operates on a request-response communication model, much like its counterpart HTTP. In this model, a client sends a CoAP request to a server, and the server responds with the requested data or action. CoAP’s request methods mirror those of HTTP, including GET, PUT, POST, and DELETE.

CoAP is like HTTP, but runs on UDP for better efficiency. HTTP is TCP-based, while CoAP targets smaller data exchanges.

Best used for applications wanting to read sensor data effectively.

Multicast Support: CoAP incorporates support for multicast communication, enabling a single CoAP message to be sent to multiple recipients simultaneously. Multicast communication reduces network traffic and efficiently disseminates data, a feature that aligns well with resource-constrained environments.

Layers in CoAP

-

Application Layer

-

Request / Response Layer

-

Message Layer

-

UDP Layer

-

Message & UDP are Lower Layers

-

Application and Request/Response are Upper Layers

Message Types

- Confirmable Messaging (CON)

- Non Confirmable Messaging (NON)

- Acknowledgeable Messaging (ACK)

- Reset Messaging (RST)

Features of CoAP

- Lightweight, asynchoronous and efficient with a 4-byte header.

- Runs connectionless on UDP, allowing for quick message exchanges.

- Easily integrates with HTTP for seamless web connectivity.

- Supports common methods: GET, POST, PUT, DELETE, dealing with these aynchronously.

| Feature | MQTT | CoAP |

|---|---|---|

| Base Protocol | TCP | UDP |

| Security | SSL/TLS | DTLS (less common) |

| Reliability | High | Medium |

| Power Usage | Higher | Lower |

| Scalability | High | Medium |

| Message Model | Publish-Subscribe | Request-Response |

| Multicast | Limited | Supported |

| QoS Levels | Yes (3 levels) | No (basic) |

| Latency | Medium | Low |

| Communication Pattern | One-to-Many | One-to-One, One-to-Many |

| Standardization | OASIS Standard | IETF Standard |

| Complexity | Higher due to Broker Setup | Lower, simpler implementation |

| Use Cases | Real-time Data Transfer, Reliable Communication | Low-Power, Low-Bandwidth Applications |

Demo

git clone https://github.com/gchandra10/python_coap_examples.git

[Avg. reading time: 17 minutes]

Python Environment

Prerequisites

For Windows Users

- Install GitBash

- Download and install from:

https://gitforwindows.org/ - This provides a Unix-like terminal environment

- Download and install from:

Step 1: Install Poetry

- Visit the official installer page:

https://python-poetry.org/docs/main/ - Follow the installation instructions for your operating system

- Verify your installation:

poetry --version

Step 2: Configure Poetry Shell

Add the shell plugin to enable virtual environment management:

poetry self add poetry-plugin-shell

Step 3: Create Your First Project

- Create a new project:

poetry new helloworld

This creates a new directory with the following structure:

helloworld/

├── pyproject.toml

├── README.md

├── helloworld/

│ └── __init__.py

└── tests/

└── __init__.py

- Navigate to your project directory

cd helloworld

Windows Users

Step 4: Working with Virtual Environments

- Create and activate a virtual environment:

poetry shell

- Switch Python Interpreter to Virtual Environment

poetry env info

poetry env info -e

poetry env <paste the path>

Or Use this one line.

poetry env use $(poetry env info -e)

- Add project dependencies:

poetry add pandas

- Create a main.py under hellworld (subfolder)

main.py

print("hello world")

Step 5: Managing Your Project

- View all installed dependencies:

poetry show

- Update dependencies:

poetry update

- Remove a dependency:

poetry remove package-name

- Run program

poetry run python main.py

- Exit Virtual Environment

exit

Key Benefits for Beginners

- Simplified Environment Management

- Poetry automatically creates and manages virtual environments

- No need to manually manage pip and virtualenv

- Clear Dependency Specification

- All dependencies are listed in one file (pyproject.toml)

- Dependencies are automatically resolved to avoid conflicts

- Project Isolation

- Each project has its own dependencies

- Prevents conflicts between different projects

- Easy Distribution

- Package your project with a single command:

poetry build

Publish to PyPI when ready:

poetry publish

Best Practices

- Always activate virtual environment before working on project

- Keep pyproject.toml updated with correct dependencies

- Use version constraints wisely

- Commit both pyproject.toml and poetry.lock files to version control

PEP

PEP, or Python Enhancement Proposal, is the official style guide for Python code. It provides conventions and recommendations for writing readable, consistent, and maintainable Python code.

Key Aspects of PEP 8 (Popular ones)

Indentation

- Use 4 spaces per indentation level.

- Avoid tabs; spaces are preferred.

Line Length

- Limit lines to a maximum of 79 characters.

- For docstrings and comments, limit lines to 72 characters.

Blank Lines

- Use blank lines to separate functions, classes, and code blocks inside functions.

- Typically, use two blank lines before defining a class or function.

Imports

- Imports should be on separate lines.

- Group imports into three sections: standard library, third-party libraries, and local application imports.

- Use absolute imports whenever possible.

Naming Conventions

- Use snake_case for function and variable names.

- Use CamelCase for class names.

- Use UPPER_SNAKE_CASE for constants.

- Avoid single-character variable names except for counters or indices.

Whitespace

- Avoid extraneous whitespace inside parentheses, brackets, or braces.

- Use a single space around operators and after commas, but not directly inside function call parentheses.

Comments

- Write comments that are clear, concise, and helpful.

- Use complete sentences and capitalize the first word.

- Use # for inline comments, but avoid them where the code is self-explanatory.

Docstrings

- Use triple quotes (“”“) for multiline docstrings.

- Describe the purpose, arguments, and return values of functions and methods.

Code Layout

- Keep function definitions and calls readable.

- Avoid writing too many nested blocks.

Consistency

Be consistent within a project or module, even if it means diverging slightly from PEP 8, as long as it serves the project better.

Linting

Python linting refers to the process of analyzing Python code for potential errors, stylistic inconsistencies, and programming best practices.

Basically review your code to make sure it follows the PEP 8 standard.

# Incorrect

import sys, os

# Correct

import os

import sys

Ruff : Linter and Code Formatter

Ruff is a fast Python linter, formatter, and code analyzer that checks your Python code for common stylistic and syntactical issues.

Install

poetry add ruff

Verify

ruff –version or –help

Demo

example.py

import os, sys

def greet(name):

print(f"Hello, {name}")

def message(name):

print(f"Hi, {name}")

def calc_sum(a, b): return a+b

greet('World')

greet('Ruff')

poetry run ruff check example.py

poetry run ruff check example.py --fix

poetry run ruff format example.py --check

poetry run ruff format example.py

MyPy : Type Checking Tool

mypy is a static type checker for Python. It checks your code against the type hints you provide, ensuring that the types are consistent throughout the codebase.

It primarily focuses on type correctness—verifying that variables, function arguments, return types, and expressions match the expected types.

Install

poetry add mypy

or

pip install mypy

sample.py

x = 1

x = 1.0

x = True

x = "test"

x = b"test"

print(x)

mypy sample.py

or

poetry run mypy sample.py

````<span id='footer-class'>Ver 6.0.5</span>

<footer id="last-change">Last change: 2026-02-05</footer>````[Avg. reading time: 11 minutes]

GIL (Global Interpreter Lock)

The Global Interpreter Lock (GIL) is a mutex (mutual exclusion) that allows only one thread to hold control of the Python interpreter. This means only one thread can execute Python bytecode at a time.

By design, GIL affects the performance of Python programs by limiting true parallelism on multi-core processors.

To learn more here is an interesting article https://realpython.com/python-gil/

Thread: Independent sequence of instructions within a program.

Parallel & Concurrent Programming

Concurrent Programming

Managing multiple tasks that can start, run, and complete in overlapping time periods.

- Tasks don’t necessarily run at the same exact moment

Like a chef managing multiple dishes in a kitchen - switching between tasks.

Used for: Improved program structure and responsiveness.

Parallel Programming

Multiple tasks or calculations happening simultaneously

- Requires multiple processors/cores.

Like multiple chefs working in different kitchen stations simultaneously

Used for: Improved performance through simultaneous execution.

Types of Concurrency

Thread Based

Pros of Threading:

- Good for I/O-bound tasks (reading files, network operations)

- Shared memory space - easy to share data between threads

- Lightweight compared to processes

Cons of Threading:

- Global Interpreter Lock (GIL) limits true parallelism

- Complex synchronization needed (locks, semaphores)

- Race conditions and deadlocks are common pitfalls

| Keyword | Description |

|---|---|

threading.Thread() | Creates a new thread object |

thread.start() | Starts thread execution |

thread.join() | Waits for thread completion |

threading.Lock() | Creates mutex lock for thread synchronization |

threading.Event() | Event synchronization between threads |

threading.Semaphore() | Limits access to shared resources |

threading.get_ident() | Gets current thread identifier |

Process Based

Pros of Multiprocessing:

- True parallelism (bypasses GIL)

- Better for CPU-intensive tasks

- Process isolation provides better stability

Cons of Multiprocessing:

- Higher memory usage

- Slower process creation

- More complex data sharing between processes

| Keyword | Description |

|---|---|

multiprocessing.Process() | Creates a new process |

process.start() | Starts process execution |

process.join() | Waits for process completion |

multiprocessing.Pool() | Creates a pool of worker processes |

multiprocessing.Queue() | Shared queue for inter-process communication |

multiprocessing.Pipe() | Creates a pipe for process communication |

os.getpid() | Gets current process ID |

Async Programming (Event-Driven)

- Single-threaded

- Cooperative multitasking

- Concurrent Programming

| Keyword | Description |

|---|---|

async def | Defines a coroutine function |

await | Waits for coroutine completion |

asyncio.create_task() | Schedules a coroutine for execution |

asyncio.gather() | Runs multiple coroutines concurrently |

asyncio.run() | Runs the async program |

asyncio.sleep() | Non-blocking sleep operation |

asyncio.Queue() | Queue for async communication |

asyncio.Event() | Event synchronization between coroutines |

async without await: Your function won’t be recognized as a coroutine await without async: You’ll get a syntax error

git clone https://github.com/gchandra10/python_concurrent_pgmming_examples.git